Animation Data Format

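Animation Data is the data format used to send avatar poses or animation data between animation-related microservices. It is currently used to send animation data from an animation source (e.g. the Audio2Face microservice) to an animation compositor (e.g. the Animation Graph microservice), and from there to a renderer (e.g. the Omniverse Renderer microservice). The format can hold either a single avatar pose frame with its corresponding audio, or an entire animation sequence with its corresponding audio. In the future, the format may be extended to support camera poses and similar data.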

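Animation Data Service

The Animation Data Service provides two RPCs: one pushes animation data from a client to a server, and the other pulls animation data from a server to a client.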

nvidia_ace.services.animation_data.v1.proto

syntax = "proto3";

package nvidia_ace.services.animation_data.v1;

import "nvidia_ace.animation_data.v1.proto";

import "nvidia_ace.animation_id.v1.proto";

import "nvidia_ace.status.v1.proto";

// Two RPCs exist to provide a stream of animation data.

// Which RPC to implement depends on whether the service acts as

// a client or a server.

// E.g.: In the case of Animation Graph Microservice, we implement both RPCs.

// One to receive and one to send.

service AnimationDataService {

// When the service creating the animation data is a client of the service receiving it,

// this push RPC must be used.

// An example is the Audio2Face Microservice creating animation data and sending it

// to the Animation Graph Microservice.

rpc PushAnimationDataStream(stream nvidia_ace.animation_data.v1.AnimationDataStream)

returns (nvidia_ace.status.v1.Status) {}

// When the service creating the animation data is a server for the service receiving it,

// this pull RPC must be used.

// An example is the Omniverse Renderer Microservice requesting animation data from the

// Animation Graph Microservice.

rpc PullAnimationDataStream(nvidia_ace.animation_id.v1.AnimationIds)

returns (stream nvidia_ace.animation_data.v1.AnimationDataStream) {}

}

//nvidia_ace.services.animation_data.v1

//v1.0.0
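To make the difference between the two RPC shapes concrete, here is a minimal in-process sketch in Python. It does not use gRPC or any generated stubs. `InMemoryAnimationDataService`, its method names, and the plain string messages are hypothetical stand-ins, and passing `stream_id` as a separate argument is a simplification (in the real protocol the IDs travel inside the stream header or the `AnimationIds` request). The push method mirrors `PushAnimationDataStream` (many messages in, one `Status` out), and the pull method mirrors `PullAnimationDataStream` (one request in, many messages out).

```python
from dataclasses import dataclass
from typing import Dict, Iterable, Iterator, List


@dataclass
class Status:
    # Stand-in for nvidia_ace.status.v1.Status (code kept as a plain string).
    code: str
    message: str


class InMemoryAnimationDataService:
    """Hypothetical in-process stand-in for AnimationDataService (no gRPC)."""

    def __init__(self) -> None:
        self._streams: Dict[str, List[str]] = {}

    def push_animation_data_stream(self, stream_id: str,
                                   messages: Iterable[str]) -> Status:
        # Client-streaming shape: the caller sends many messages, gets one Status.
        received = list(messages)
        self._streams.setdefault(stream_id, []).extend(received)
        return Status("SUCCESS", f"received {len(received)} messages")

    def pull_animation_data_stream(self, stream_id: str) -> Iterator[str]:
        # Server-streaming shape: the caller sends one request, gets many messages.
        yield from self._streams.get(stream_id, [])


service = InMemoryAnimationDataService()
# An animation source pushes its stream to the service...
status = service.push_animation_data_stream(
    "stream-1", iter(["header", "chunk-0", "chunk-1"]))
# ...and a renderer-like consumer pulls the stream back out.
pulled = list(service.pull_animation_data_stream("stream-1"))
```

A service that sits in the middle of the pipeline, as the comments above describe for the Animation Graph Microservice, would expose both shapes: one to receive and one to send.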

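Animation Data Stream

Both RPCs, push and pull, implement the same animation data stream protocol. The animation data payload is inspired by USDA, with some changes that improve streaming. For example, the avatar pose data is split into a header, which is sent only once at the beginning of the stream, and one or more animation data chunks, which are sent after the header.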
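The ordering rules of the stream (header first and only once, then one or more animation data messages, with a status sent last and optionally in between, per the comments in the proto definition that follows) can be sketched as a small validator. This is an illustration only: `Header`, `Data`, `Status`, and `check_stream_order` are hypothetical plain-Python stand-ins, not the generated protobuf classes.

```python
from dataclasses import dataclass
from typing import Iterable, Union


@dataclass
class Header:
    # Stand-in for AnimationDataStreamHeader.
    source_service_id: str


@dataclass
class Data:
    # Stand-in for AnimationData.
    time_code: float


@dataclass
class Status:
    # Stand-in for nvidia_ace.status.v1.Status.
    code: str


StreamPart = Union[Header, Data, Status]


def check_stream_order(parts: Iterable[StreamPart]) -> None:
    """Raise ValueError if the sequence violates the documented ordering."""
    parts = list(parts)
    if not parts or not isinstance(parts[0], Header):
        raise ValueError("the header must be sent as the first message")
    if any(isinstance(p, Header) for p in parts[1:]):
        raise ValueError("the header is sent only once")
    if not any(isinstance(p, Data) for p in parts):
        raise ValueError("one or more animation data messages must be sent")
    if not isinstance(parts[-1], Status):
        raise ValueError("a status must be sent last")


# A valid stream: header, data, an intermediate status, more data, final status.
check_stream_order([
    Header("A2F MS"),
    Data(0.0),
    Status("INFO"),
    Data(0.033),
    Status("SUCCESS"),
])
```

A receiver could run a check like this incrementally rather than on a buffered list. The list form is used here only to keep the sketch short.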

nvidia_ace.animation_data.v1.proto

syntax = "proto3";

package nvidia_ace.animation_data.v1;

import "nvidia_ace.animation_id.v1.proto";

import "nvidia_ace.audio.v1.proto";

import "nvidia_ace.status.v1.proto";

import "google/protobuf/any.proto";

// IMPORTANT NOTE: this is an AnimationDataStreamHeader WITH ID

// A similar AudioStreamHeader exists in nvidia_ace.controller.v1.proto

// but that one does NOT contain IDs

message AnimationDataStreamHeader {

nvidia_ace.animation_id.v1.AnimationIds animation_ids = 1;

// This is required to identify from which animation source (e.g. A2F) the

// request originates. This allows us to map the incoming animation data

// stream to the correct pose provider animation graph node. The animation

// source MSs (e.g. A2F MS) should populate this with their name. (e.g. A2F).

// Example Value: "A2F MS"

optional string source_service_id = 2;

// Metadata of the audio buffers. This defines the audio clip properties

// at the beginning of the streaming process.

optional nvidia_ace.audio.v1.AudioHeader audio_header = 3;

// Metadata containing the blendshape and joints names.

// This defines the names of the blendshapes and joints flowing through a stream.

optional nvidia_ace.animation_data.v1.SkelAnimationHeader

skel_animation_header = 4;

// Animation data streams use time codes (`time_code`) to define the temporal

// position of audio (e.g. `AudioWithTimeCode`), animation key frames (e.g.

// `SkelAnimation`), etc. relative to the beginning of the stream. The unit of

// `time_code` is seconds. In addition, the `AnimationDataStreamHeader` also

// provides the `start_time_code_since_epoch` field, which defines the

// absolute start time of the animation data stream. This start time is stored

// in seconds elapsed since the Unix time epoch.

double start_time_code_since_epoch = 5;

// A generic metadata field to attach use case specific data (e.g. session id,

// or user id?)

// map<string, string> metadata = 6;

// map<string, google.protobuf.Any> metadata = 6;

}

// This message represents each message of a stream of animation data.

message AnimationDataStream {

oneof stream_part {

// The header must be sent as the first message.

AnimationDataStreamHeader animation_data_stream_header = 1;

// Then one or more animation data messages must be sent.

nvidia_ace.animation_data.v1.AnimationData animation_data = 2;

// The status must be sent last and may be sent in between.

nvidia_ace.status.v1.Status status = 3;

}

}

message AnimationData {

optional SkelAnimation skel_animation = 1;

optional AudioWithTimeCode audio = 2;

optional Camera camera = 3;

// Metadata such as emotion aggregates, etc...

map<string, google.protobuf.Any> metadata = 4;

}

message AudioWithTimeCode {

// The time code is relative to the `start_time_code_since_epoch`.

// Example Value: 0.0 (for the very first audio buffer flowing out of a service)

double time_code = 1;

// Audio Data in bytes, for how to interpret these bytes you need to refer to

// the audio header.

bytes audio_buffer = 2;

}

message SkelAnimationHeader {

// Names of the blendshapes only sent once in the header

// The position of these names is the same as the position of the values

// of the blendshapes messages

// As an example if the blendshape names are ["Eye Left", "Eye Right", "Jaw"]

// Then when receiving blendshape data over the streaming process

// E.g.: [0.1, 0.5, 0.2] & timecode = 0.0

// The pairing will be for timecode=0.0, "Eye Left"=0.1, "Eye Right"=0.5, "Jaw"=0.2

repeated string blend_shapes = 1;

// Names of the joints only sent once in the header

repeated string joints = 2;

}

message SkelAnimation {

// Time codes must be strictly monotonically increasing.

// Two successive SkelAnimation messages must not have overlapping time code

// ranges.

repeated FloatArrayWithTimeCode blend_shape_weights = 1;

repeated Float3ArrayWithTimeCode translations = 2;

repeated QuatFArrayWithTimeCode rotations = 3;

repeated Float3ArrayWithTimeCode scales = 4;

}

message Camera {

repeated Float3WithTimeCode position = 1;

repeated QuatFWithTimeCode rotation = 2;

repeated FloatWithTimeCode focal_length = 3;

repeated FloatWithTimeCode focus_distance = 4;

}

message FloatArrayWithTimeCode {

double time_code = 1;

repeated float values = 2;

}

message Float3ArrayWithTimeCode {

double time_code = 1;

repeated Float3 values = 2;

}

message QuatFArrayWithTimeCode {

double time_code = 1;

repeated QuatF values = 2;

}

message Float3WithTimeCode {

double time_code = 1;

Float3 value = 2;

}

message QuatFWithTimeCode {

double time_code = 1;

QuatF value = 2;

}

message FloatWithTimeCode {

double time_code = 1;

float value = 2;

}

message QuatF {

float real = 1;

float i = 2;

float j = 3;

float k = 4;

}

message Float3 {

float x = 1;

float y = 2;

float z = 3;

}

//nvidia_ace.animation_data.v1

//v1.0.0
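The comments in the definition above describe three conventions that are easy to get wrong on the receiving side: blend shape names are paired with weights by position, time codes are relative to `start_time_code_since_epoch`, and time codes must be strictly increasing. The following sketch shows them with plain Python values. The helper names are hypothetical and the lists stand in for the decoded protobuf fields.

```python
from typing import Dict, List


def pair_blend_shapes(names: List[str], values: List[float]) -> Dict[str, float]:
    """Pair header names with one frame of weights by position."""
    if len(names) != len(values):
        raise ValueError("expected one weight per blend shape name")
    return dict(zip(names, values))


def absolute_time(start_time_code_since_epoch: float, time_code: float) -> float:
    """Seconds since the Unix epoch for a stream-relative time code."""
    return start_time_code_since_epoch + time_code


def strictly_increasing(time_codes: List[float]) -> bool:
    """True if every time code is greater than the one before it."""
    return all(a < b for a, b in zip(time_codes, time_codes[1:]))


# Names arrive once, in SkelAnimationHeader.blend_shapes.
names = ["Eye Left", "Eye Right", "Jaw"]
# One FloatArrayWithTimeCode entry from SkelAnimation.blend_shape_weights.
frame = {"time_code": 0.0, "values": [0.1, 0.5, 0.2]}

weights = pair_blend_shapes(names, frame["values"])
```

Here `weights` maps "Eye Left" to 0.1, "Eye Right" to 0.5, and "Jaw" to 0.2, which matches the pairing given in the `SkelAnimationHeader` comment.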

nvidia_ace.animation_id.v1.proto

syntax = "proto3";

package nvidia_ace.animation_id.v1;

message AnimationIds {

// This is required to track a single animation source (e.g. A2X) request

// through the animation pipeline. This is going to allow e.g. the controller

// to stop a request after it has been sent to the animation compositor (e.g.

// animation graph).

// Example Value: "8b09637f-737e-488c-872e-e367e058aa15"

// Note1: The above value is an example UUID (https://en.wikipedia.org/wiki/Universally_unique_identifier)

// Note2: You don't need to provide specifically a UUID, any text should work, however UUID are recommended

// for their low chance of collision

string request_id = 1;

// The stream id is shared across the animation pipeline and identifies all

// animation data streams that belong to the same stream. Thus, there will be

// multiple requests all belonging to the same stream. Different user sessions

// will usually result in a new stream id. This is required for stateful MSs

// (e.g. anim graph) to map different requests to the same stream.

// Example Value: "17f1fefd-3812-4211-94e8-7af1ef723d7f"

// Note1: The above value is an example UUID (https://en.wikipedia.org/wiki/Universally_unique_identifier)

// Note2: You don't need to provide specifically a UUID, any text should work, however UUID are recommended

// for their low chance of collision

string stream_id = 2;

// This identifies the target avatar or object the animation data applies to.

// This is required when there are multiple avatars or objects in the scene.

// Example Value: "AceModel"

string target_object_id = 3;

}

//nvidia_ace.animation_id.v1

//v1.0.0
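As the comments above note, any text works for `request_id` and `stream_id`, but UUIDs are recommended. A minimal sketch of generating them with Python's standard `uuid` module follows. The `AnimationIds` dataclass and the `new_request` helper are hypothetical stand-ins for the generated message, and the default target `"AceModel"` is simply the example value from the comment.

```python
import uuid
from dataclasses import dataclass


@dataclass(frozen=True)
class AnimationIds:
    # Plain-Python stand-in mirroring the three string fields of the message.
    request_id: str
    stream_id: str
    target_object_id: str


def new_request(stream_id: str, target_object_id: str = "AceModel") -> AnimationIds:
    """Create IDs for a new request that belongs to an existing stream."""
    return AnimationIds(str(uuid.uuid4()), stream_id, target_object_id)


# One stream id is shared by every request in the same stream...
stream_id = str(uuid.uuid4())
# ...while each request gets its own request id.
first = new_request(stream_id)
second = new_request(stream_id)
```

Both `first` and `second` carry the same `stream_id`, which is what lets a stateful microservice map different requests to the same stream.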

nvidia_ace.audio.v1.proto

syntax = "proto3";

package nvidia_ace.audio.v1;

message AudioHeader {

enum AudioFormat { AUDIO_FORMAT_PCM = 0; }

// Example value: AUDIO_FORMAT_PCM

AudioFormat audio_format = 1;

// Currently only mono sound must be supported.

// Example value: 1

uint32 channel_count = 2;

// Defines the sample rate of the provided audio data

// Example value: 16000

uint32 samples_per_second = 3;

// Currently only 16 bits per sample must be supported.

// Example value: 16

uint32 bits_per_sample = 4;

}

//nvidia_ace.audio.v1

//v1.0.0
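The `AudioHeader` fields are what a receiver needs to interpret the raw bytes in `AudioWithTimeCode.audio_buffer`. As an illustration, the sketch below derives the duration of a buffer from those fields, assuming uncompressed PCM with a whole number of bytes per sample. The function names are hypothetical, and the defaults are the example values from the comments above.

```python
def bytes_per_second(channel_count: int, samples_per_second: int,
                     bits_per_sample: int) -> int:
    """Data rate of uncompressed PCM audio with the given header values."""
    return channel_count * samples_per_second * bits_per_sample // 8


def buffer_duration_seconds(audio_buffer: bytes,
                            channel_count: int = 1,
                            samples_per_second: int = 16000,
                            bits_per_sample: int = 16) -> float:
    """Length of a PCM buffer in seconds."""
    rate = bytes_per_second(channel_count, samples_per_second, bits_per_sample)
    return len(audio_buffer) / rate


# Mono, 16 kHz, 16-bit audio uses 32000 bytes per second,
# so a 3200-byte buffer covers a tenth of a second.
duration = buffer_duration_seconds(bytes(3200))
```

A duration computed this way can be compared against the gap between consecutive `time_code` values to spot missing or overlapping audio buffers.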

nvidia_ace.status.v1.proto

syntax = "proto3";

package nvidia_ace.status.v1;

// This status message indicates the result of an operation

// Refer to the rpc using it for more information

message Status {

enum Code {

SUCCESS = 0;

INFO = 1;

WARNING = 2;

ERROR = 3;

}

// Type of message returned by the service

// Example value: SUCCESS

Code code = 1;

// Message returned by the service

// Example value: "Audio processing completed successfully!"

string message = 2;

}

//nvidia_ace.status.v1

//v1.0.0

标准骨骼绑定#

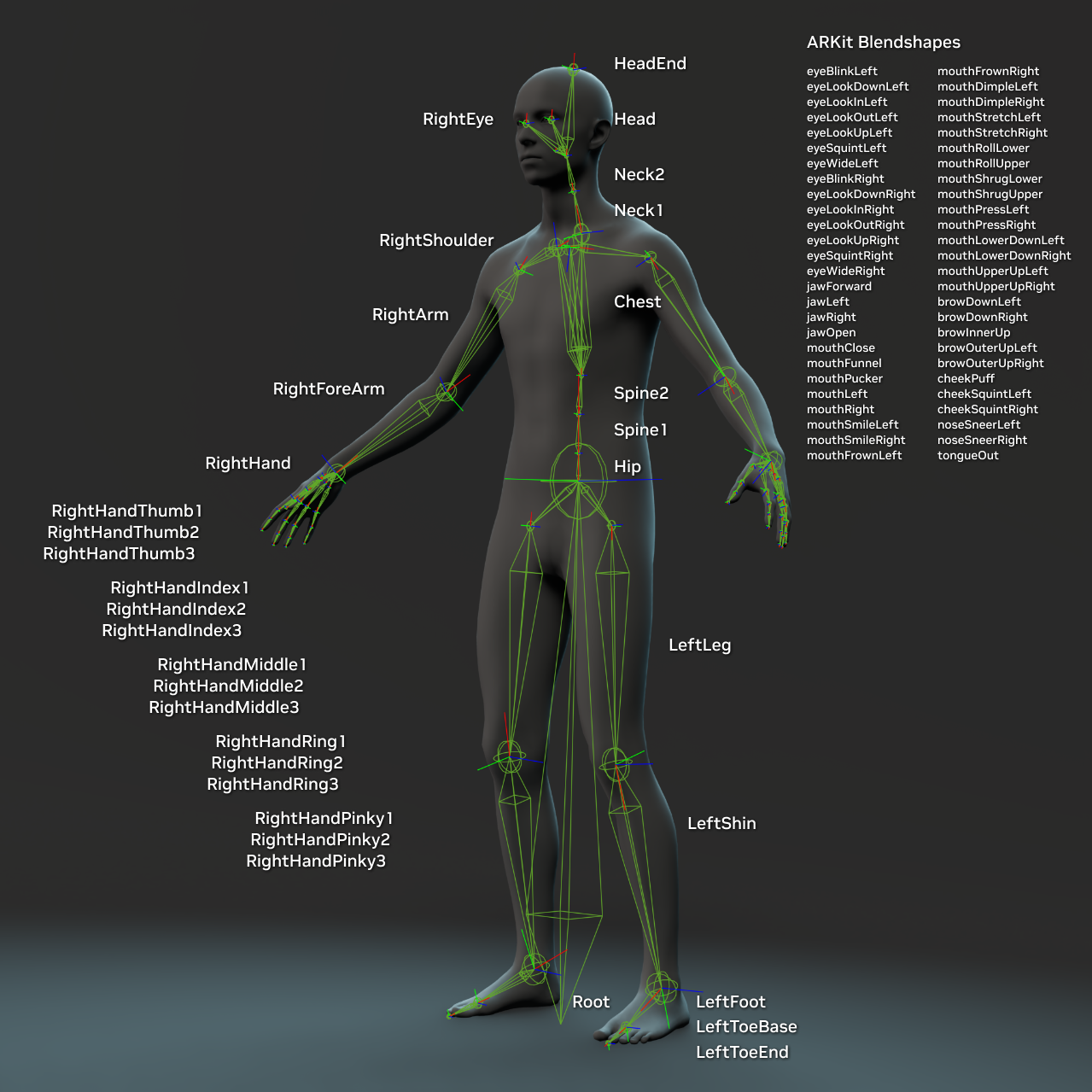

动画数据格式非常通用,并且在技术上可以支持各种骨骼绑定。为了提高动画微服务的互操作性,各种动画微服务使用标准化的双足角色骨骼绑定。下图说明了骨骼绑定的拓扑结构和关节命名。

标准双足骨骼绑定以及相应的关节拓扑结构和命名#

骨骼绑定的面部部分基于 Apple 的 ARKit 定义的 blendshape。

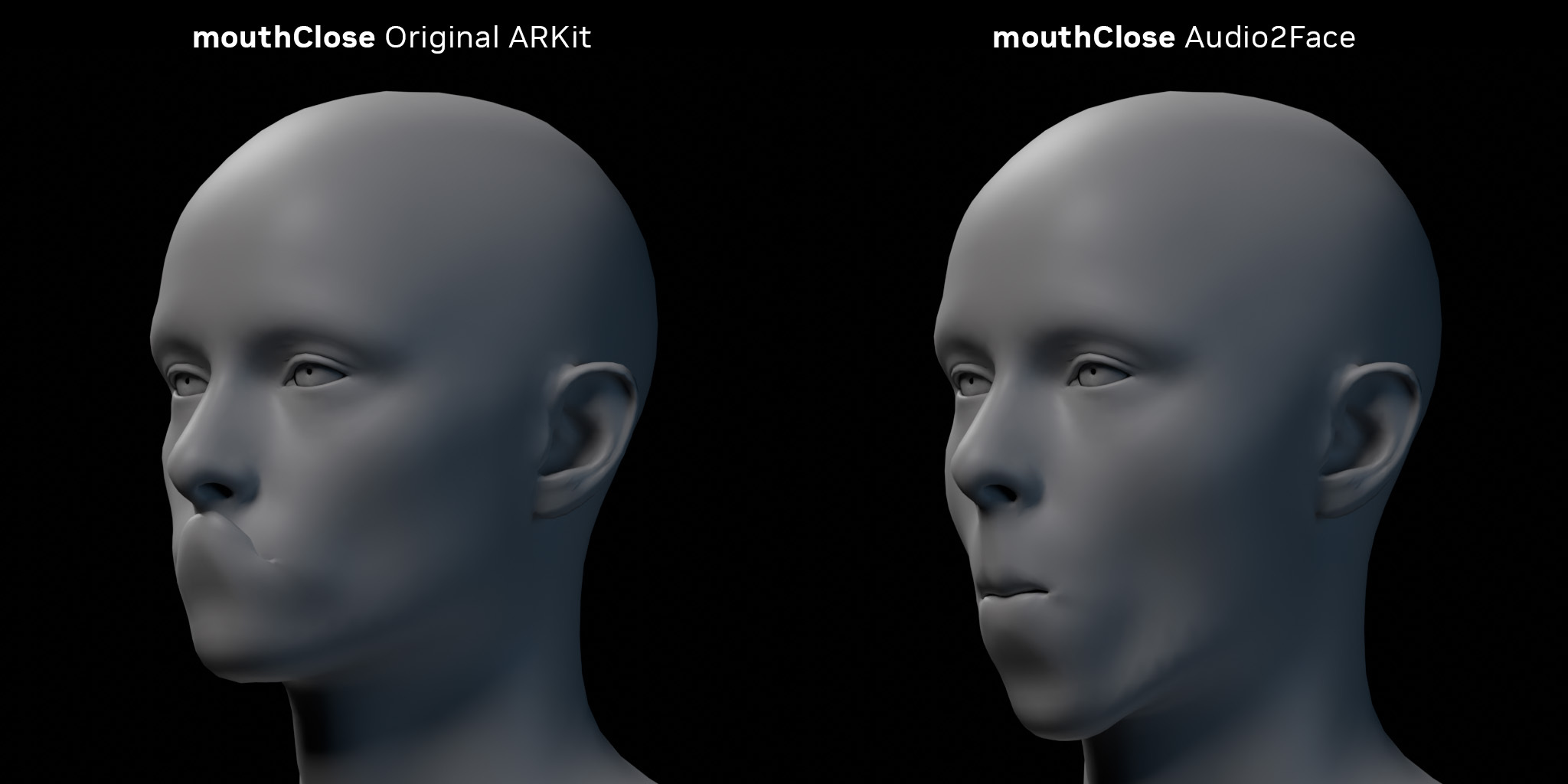

警告

blendshape mouthClose 的定义偏离了标准 ARKit 版本。该形状包括下颌的张开,如下图所示。

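Coordinate System

The coordinate system used in the animation data is defined with the Y axis pointing up, the X axis pointing to the left (from the avatar's point of view), and the Z axis pointing to the front of the avatar.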
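The axis convention can be written down as constants, which helps when interpreting `Float3` translations. The names below are hypothetical. The cross product at the end reflects that avatar-left, up, and avatar-front in this order form a right-handed system, an observation derived from the axis definition here rather than stated in the source.

```python
from typing import Dict, Tuple

Vec3 = Tuple[float, float, float]

# Unit directions in the animation data coordinate system.
AVATAR_LEFT: Vec3 = (1.0, 0.0, 0.0)   # +X, left from the avatar's point of view
UP: Vec3 = (0.0, 1.0, 0.0)            # +Y
AVATAR_FRONT: Vec3 = (0.0, 0.0, 1.0)  # +Z, the direction the avatar faces


def cross(a: Vec3, b: Vec3) -> Vec3:
    """Standard 3D cross product."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def describe_offset(offset: Vec3) -> Dict[str, float]:
    """Express an (x, y, z) offset in avatar-relative terms."""
    x, y, z = offset
    return {"left": x, "up": y, "forward": z}


# A negative "left" component means the offset points to the avatar's right.
offset = describe_offset((-0.2, 1.5, 0.3))
# X cross Y equals Z for these axes.
front_from_cross = cross(AVATAR_LEFT, UP)
```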