Network Operator

Verify that the NVIDIA Mellanox OFED package version on the DGX systems matches the version listed in the DGX OS release notes.

```shell
# cmsh
% device
% pexec -c dgx-a100 -j "ofed_info -s"
[dgx01..dgx04]
MLNX_OFED_LINUX-23.10-0.5.5.0:
```
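Comparing version strings by eye across many nodes is error-prone. The shell function below is a minimal sketch of an automated check. `check_ofed_version` is a hypothetical helper. You supply the expected version string from the DGX OS release notes; the value used in the example is only the one from the sample output.

```shell
# Hypothetical helper: compare the string printed by `ofed_info -s`
# (for example "MLNX_OFED_LINUX-23.10-0.5.5.0:") with the version from the
# DGX OS release notes, which the caller supplies as the second argument.
check_ofed_version() {
    local reported expected version
    reported=$1
    expected=$2
    # Drop whitespace/newlines, the "MLNX_OFED_LINUX-" prefix and the trailing colon.
    version=$(printf '%s' "$reported" | tr -d '[:space:]')
    version=${version#MLNX_OFED_LINUX-}
    version=${version%:}
    if [ "$version" = "$expected" ]; then
        echo "OK: $version"
        return 0
    fi
    echo "MISMATCH: found $version, expected $expected" >&2
    return 1
}
```

For example, on a single node: `check_ofed_version "$(ofed_info -s)" 23.10-0.5.5.0`.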

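Identify the InfiniBand interfaces used in the compute fabric and check their operational state. As described earlier, the compute fabric uses mlx5_0, mlx5_2, mlx5_6, and mlx5_8, so verify that these are working. Every interface on every node should report State: Active, Physical state: LinkUp, and Link layer: InfiniBand.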

Use the following command to verify that the interfaces are working:

```shell
[basepod-head1->device]% pexec -c dgx-a100 -j "for i in 0 2 6 8; do ibstat -d mlx5_${i} | grep -i \"mlx5_\\|state\\|infiniband\"; done"
[dgx01..dgx04]
CA 'mlx5_0'
        State: Active
        Physical state: LinkUp
        Link layer: InfiniBand
CA 'mlx5_2'
        State: Active
        Physical state: LinkUp
        Link layer: InfiniBand
CA 'mlx5_6'
        State: Active
        Physical state: LinkUp
        Link layer: InfiniBand
CA 'mlx5_8'
        State: Active
        Physical state: LinkUp
        Link layer: InfiniBand
```
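With many nodes, the same pass/fail rule can be applied mechanically. The sketch below defines a hypothetical `check_ibstat` function. It reads `ibstat` output on standard input, either full or filtered with the `grep` above, and reports any CA that is not Active, LinkUp, and InfiniBand. It assumes each CA header line starts at column 0 as `CA '<name>'`, as in the output above.

```shell
# Hypothetical helper: read ibstat output (full, or filtered with grep as
# above) on stdin and flag every CA that is not Active / LinkUp / InfiniBand.
# Assumes each CA header line starts at column 0 as "CA '<name>'";
# the two sub() calls strip the surrounding quote characters from the name.
check_ibstat() {
    awk '
        /^CA /                   { ca = $2; sub(/^./, "", ca); sub(/.$/, "", ca); seen[ca] = 1; next }
        /^[ \t]*State:/          { if ($2 != "Active")     bad[ca] = bad[ca] " State=" $2 }
        /^[ \t]*Physical state:/ { if ($3 != "LinkUp")     bad[ca] = bad[ca] " Physical=" $3 }
        /^[ \t]*Link layer:/     { if ($3 != "InfiniBand") bad[ca] = bad[ca] " LinkLayer=" $3 }
        END {
            rc = 0
            for (c in seen) {
                if (c in bad) { print "FAIL " c ":" bad[c]; rc = 1 }
                else          { print "OK   " c }
            }
            exit rc
        }'
}
```

On a node, for example: `for i in 0 2 6 8; do ibstat -d mlx5_${i}; done | check_ibstat`. The output order of CAs is not guaranteed, because awk iterates over an associative array.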

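Check the SR-IOV configuration of each interface. The expected values are:

- NUM_OF_VFS: 8
- SRIOV_EN: True(1)
- LINK_TYPE_P1: IB(1)

In this example, only LINK_TYPE_P1 is already set correctly. The other two values are set in the next step.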

```shell
[basepod-head1->device]% pexec -c dgx-a100 -j "for i in 0 2 6 8; do mst start; mlxconfig -d /dev/mst/mt4123_pciconf${i} q; done | grep -e \"SRIOV_EN\\|LINK_TYPE\\|NUM_OF_VFS\""
[dgx01..dgx04]
        NUM_OF_VFS                  0
        SRIOV_EN                    False(0)
        LINK_TYPE_P1                IB(1)
        NUM_OF_VFS                  0
        SRIOV_EN                    False(0)
        LINK_TYPE_P1                IB(1)
        NUM_OF_VFS                  0
        SRIOV_EN                    False(0)
        LINK_TYPE_P1                IB(1)
        NUM_OF_VFS                  0
        SRIOV_EN                    False(0)
        LINK_TYPE_P1                IB(1)
```
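Deciding which parameters still need changing can also be scripted. The hypothetical `sriov_todo` function below reads `mlxconfig ... q` output and prints the `NAME=VALUE` arguments that differ from the targets listed above (NUM_OF_VFS 8, SRIOV_EN True(1), LINK_TYPE_P1 IB(1)). It is a sketch that looks only at those three parameters and assumes the two-column layout shown in the query output.

```shell
# Hypothetical helper: read `mlxconfig -d <dev> q` output on stdin and print
# the NAME=VALUE arguments still needed to reach the targets in this guide
# (NUM_OF_VFS 8, SRIOV_EN True(1), LINK_TYPE_P1 IB(1)). Other parameters are ignored.
sriov_todo() {
    awk '
        $1 == "NUM_OF_VFS"   && $2 != "8"       { print "NUM_OF_VFS=8" }
        $1 == "SRIOV_EN"     && $2 != "True(1)" { print "SRIOV_EN=1" }
        $1 == "LINK_TYPE_P1" && $2 != "IB(1)"   { print "LINK_TYPE_P1=1" }
    '
}
```

For one device, for example `mlxconfig -d /dev/mst/mt4123_pciconf0 q | sriov_todo`, the sample values above yield `NUM_OF_VFS=8` and `SRIOV_EN=1`. These match the arguments used in the next step. When you feed it the concatenated output of several devices, pipe the result through `sort -u`.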

Enable SR-IOV and set NUM_OF_VFS to 8 on each interface.

Because LINK_TYPE_P1 is already set correctly, the command below sets only the other two values.

```shell
[basepod-head1->device]% pexec -c dgx-a100 -j "for i in 0 2 6 8; do mst start; mlxconfig -d /dev/mst/mt4123_pciconf${i} -y set SRIOV_EN=1 NUM_OF_VFS=8; done"
[dgx01..dgx04]
Starting MST (Mellanox Software Tools) driver set
Loading MST PCI module - Success
[warn] mst_pciconf is already loaded, skipping
Create devices
Unloading MST PCI module (unused) - Success

Device #1:
----------

Device type:    ConnectX6
Name:           MCX653105A-HDA_Ax
Description:    ConnectX-6 VPI adapter card; HDR IB (200Gb/s) and 200GbE; single-port QSFP56; PCIe4.0 x16; tall bracket; ROHS R6
Device:         /dev/mst/mt4123_pciconf0

Configurations:                 Next Boot       New
        SRIOV_EN                False(0)        True(1)
        NUM_OF_VFS              0               8

Apply new Configuration? (y/n) [n] : y
Applying... Done!
-I- Please reboot machine to load new configurations.
. . . some output omitted . . .
```

Reboot the DGX nodes to load the new configuration.

```shell
% reboot -c dgx-a100
```

Wait until the DGX nodes are back UP before continuing to the next step.

```shell
% list -c dgx-a100 -f hostname:20,category:10,ip:20,status:10
hostname (key)       category   ip                   status
-------------------- ---------- -------------------- ----------
dgx01                dgx-a100   10.184.71.11         [   UP   ]
dgx02                dgx-a100   10.184.71.12         [   UP   ]
dgx03                dgx-a100   10.184.71.13         [   UP   ]
dgx04                dgx-a100   10.184.71.14         [   UP   ]
```
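Instead of re-running `list` by hand, you can script the wait. The sketch below defines two hypothetical functions. `all_dgx_up` counts nodes in category dgx-a100 whose status shows UP in brackets. `wait_for_dgx` polls until the expected number of nodes is UP. `wait_for_dgx` assumes that cmsh's non-interactive `-c` mode accepts the semicolon-separated `device; list ...` commands; check this against your BCM version.

```shell
# Hypothetical helper: count nodes in category dgx-a100 whose status column
# shows "[ UP" in `list` output on stdin; succeed if the count equals $1.
all_dgx_up() {
    local expected up
    expected=$1
    up=$(awk '$2 == "dgx-a100" && /\[ *UP/ { n++ } END { print n + 0 }')
    [ "$up" -eq "$expected" ]
}

# Poll every 30 s until the expected number of DGX nodes is UP.
# Assumes cmsh runs semicolon-separated commands non-interactively via -c.
wait_for_dgx() {
    until cmsh -c "device; list -c dgx-a100 -f hostname:20,category:10,status:10" \
            | all_dgx_up "$1"; do
        sleep 30
    done
}
```

For the four nodes in this example, run `wait_for_dgx 4` on the head node.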

Configure eight SR-IOV VFs on each InfiniBand port.

```shell
[basepod-head1->device]% pexec -c dgx-a100 -j "for i in 0 2 6 8; do echo 8 > /sys/class/infiniband/mlx5_${i}/device/sriov_numvfs; done"
```
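Writing to `sriov_numvfs` produces no output on success, so it is worth reading the value back. The hypothetical `report_vfs` function below prints the PF network interface found under `device/net` in sysfs for each HCA, along with the current VF count. It returns non-zero if any count differs from the requested value. These interface names are the ones to use as `pfNames` in the SriovNetworkNodePolicy objects. The `SYSFS_IB` override is an assumption added only to make the function testable.

```shell
# Hypothetical helper: for each HCA name given after the expected VF count,
# print "<hca> <PF netdev> numvfs=<n>" from sysfs; return 1 if any count differs.
# SYSFS_IB defaults to /sys/class/infiniband and can be overridden for testing.
report_vfs() {
    local root want rc dev numvfs netdev
    root=${SYSFS_IB:-/sys/class/infiniband}
    want=$1
    shift
    rc=0
    for dev in "$@"; do
        numvfs=$(cat "$root/$dev/device/sriov_numvfs" 2>/dev/null || echo "?")
        netdev=$(ls "$root/$dev/device/net" 2>/dev/null | head -n 1)
        echo "$dev ${netdev:-?} numvfs=$numvfs"
        [ "$numvfs" = "$want" ] || rc=1
    done
    return "$rc"
}
```

On a DGX node: `report_vfs 8 mlx5_0 mlx5_2 mlx5_6 mlx5_8`.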

On the primary head node, load the Kubernetes environment module.

```shell
# module load kubernetes/default/1.27.11-150500.1.1
```

Add the Network Operator Helm repository and update the chart index.

```shell
# helm repo add nvidia-networking https://mellanox.github.io/network-operator
"nvidia-networking" has been added to your repositories

# helm repo update
Hang tight while we grab the latest from your chart repositories...
...Successfully got an update from the "nvidia-networking" chart repository
...Successfully got an update from the "prometheus-community" chart repository
...Successfully got an update from the "nvidia" chart repository
Update Complete. ⎈Happy Helming!⎈
```

Create the ./network-operator directory.

```shell
# mkdir ./network-operator
```

Create the values.yaml file used to install the Network Operator with Helm.

```shell
# vi ./network-operator/values.yaml
```

```yaml
nfd:
  enabled: true
sriovNetworkOperator:
  enabled: true

# NicClusterPolicy CR values:
deployCR: true
ofedDriver:
  deploy: false
rdmaSharedDevicePlugin:
  deploy: false
sriovDevicePlugin:
  deploy: false

secondaryNetwork:
  deploy: true
  multus:
    deploy: true
  cniPlugins:
    deploy: true
  ipamPlugin:
    deploy: true
```

Create the sriov-ib-network-node-policy.yaml file.

```shell
# vi ./network-operator/sriov-ib-network-node-policy.yaml
```

```yaml
apiVersion: sriovnetwork.openshift.io/v1
kind: SriovNetworkNodePolicy
metadata:
  name: ibp12s0
  namespace: network-operator
spec:
  deviceType: netdevice
  nodeSelector:
    feature.node.kubernetes.io/network-sriov.capable: "true"
  nicSelector:
    vendor: "15b3"
    pfNames: ["ibp12s0"]
  linkType: ib
  isRdma: true
  numVfs: 8
  priority: 90
  resourceName: resibp12s0

---
apiVersion: sriovnetwork.openshift.io/v1
kind: SriovNetworkNodePolicy
metadata:
  name: ibp75s0
  namespace: network-operator
spec:
  deviceType: netdevice
  nodeSelector:
    feature.node.kubernetes.io/network-sriov.capable: "true"
  nicSelector:
    vendor: "15b3"
    pfNames: ["ibp75s0"]
  linkType: ib
  isRdma: true
  numVfs: 8
  priority: 90
  resourceName: resibp75s0

---
apiVersion: sriovnetwork.openshift.io/v1
kind: SriovNetworkNodePolicy
metadata:
  name: ibp141s0
  namespace: network-operator
spec:
  deviceType: netdevice
  nodeSelector:
    feature.node.kubernetes.io/network-sriov.capable: "true"
  nicSelector:
    vendor: "15b3"
    pfNames: ["ibp141s0"]
  linkType: ib
  isRdma: true
  numVfs: 8
  priority: 90
  resourceName: resibp141s0

---
apiVersion: sriovnetwork.openshift.io/v1
kind: SriovNetworkNodePolicy
metadata:
  name: ibp186s0
  namespace: network-operator
spec:
  deviceType: netdevice
  nodeSelector:
    feature.node.kubernetes.io/network-sriov.capable: "true"
  nicSelector:
    vendor: "15b3"
    pfNames: ["ibp186s0"]
  linkType: ib
  isRdma: true
  numVfs: 8
  priority: 90
  resourceName: resibp186s0
```
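The four policies differ only in the PF name and the derived resource name, so the file can also be generated. The sketch below defines a hypothetical `gen_node_policies` function. It emits one SriovNetworkNodePolicy per PF name passed to it, separated by `---`, and copies every other field from the manifest above. It assumes the resource name is always `res` followed by the PF name, which is the convention used here.

```shell
# Hypothetical generator: print one SriovNetworkNodePolicy per PF name argument,
# with all other fields copied from the hand-written manifest in this guide.
gen_node_policies() {
    local first pf
    first=1
    for pf in "$@"; do
        # Separate YAML documents with "---" (not before the first one).
        [ "$first" -eq 1 ] || echo "---"
        first=0
        cat <<EOF
apiVersion: sriovnetwork.openshift.io/v1
kind: SriovNetworkNodePolicy
metadata:
  name: ${pf}
  namespace: network-operator
spec:
  deviceType: netdevice
  nodeSelector:
    feature.node.kubernetes.io/network-sriov.capable: "true"
  nicSelector:
    vendor: "15b3"
    pfNames: ["${pf}"]
  linkType: ib
  isRdma: true
  numVfs: 8
  priority: 90
  resourceName: res${pf}
EOF
    done
}
```

Usage: `gen_node_policies ibp12s0 ibp75s0 ibp141s0 ibp186s0 > ./network-operator/sriov-ib-network-node-policy.yaml`.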

Create the sriovibnetwork.yaml file.

```shell
# vi ./network-operator/sriovibnetwork.yaml
```

```yaml
apiVersion: sriovnetwork.openshift.io/v1
kind: SriovIBNetwork
metadata:
  name: ibp12s0
  namespace: network-operator
spec:
  ipam: |
    {
      "type": "whereabouts",
      "datastore": "kubernetes",
      "kubernetes": {
        "kubeconfig": "/etc/cni/net.d/whereabouts.d/whereabouts.kubeconfig"
      },
      "range": "192.168.1.0/24",
      "log_file": "/var/log/whereabouts.log",
      "log_level": "info"
    }
  resourceName: resibp12s0
  linkState: enable
  networkNamespace: default

---
apiVersion: sriovnetwork.openshift.io/v1
kind: SriovIBNetwork
metadata:
  name: ibp75s0
  namespace: network-operator
spec:
  ipam: |
    {
      "type": "whereabouts",
      "datastore": "kubernetes",
      "kubernetes": {
        "kubeconfig": "/etc/cni/net.d/whereabouts.d/whereabouts.kubeconfig"
      },
      "range": "192.168.2.0/24",
      "log_file": "/var/log/whereabouts.log",
      "log_level": "info"
    }
  resourceName: resibp75s0
  linkState: enable
  networkNamespace: default

---
apiVersion: sriovnetwork.openshift.io/v1
kind: SriovIBNetwork
metadata:
  name: ibpi141s0
  namespace: network-operator
spec:
  ipam: |
    {
      "type": "whereabouts",
      "datastore": "kubernetes",
      "kubernetes": {
        "kubeconfig": "/etc/cni/net.d/whereabouts.d/whereabouts.kubeconfig"
      },
      "range": "192.168.3.0/24",
      "log_file": "/var/log/whereabouts.log",
      "log_level": "info"
    }
  resourceName: resibp141s0
  linkState: enable
  networkNamespace: default

---
apiVersion: sriovnetwork.openshift.io/v1
kind: SriovIBNetwork
metadata:
  name: ibp186s0
  namespace: network-operator
spec:
  ipam: |
    {
      "type": "whereabouts",
      "datastore": "kubernetes",
      "kubernetes": {
        "kubeconfig": "/etc/cni/net.d/whereabouts.d/whereabouts.kubeconfig"
      },
      "range": "192.168.4.0/24",
      "log_file": "/var/log/whereabouts.log",
      "log_level": "info"
    }
  resourceName: resibp186s0
  linkState: enable
  networkNamespace: default
```
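Likewise, the SriovIBNetwork objects differ only in name, resource name, and IP range. The hypothetical `gen_ib_networks` function below assigns `192.168.1.0/24` to the first PF, `192.168.2.0/24` to the second, and so on, matching the ranges in the file above. One difference: it names the third network `ibp141s0`, whereas the hand-written file uses `ibpi141s0`. If any other manifest or pod annotation refers to `ibpi141s0`, keep the names consistent.

```shell
# Hypothetical generator: print one SriovIBNetwork per PF name argument.
# The Nth PF gets the whereabouts range 192.168.N.0/24 (N starts at 1).
gen_ib_networks() {
    local n pf
    n=0
    for pf in "$@"; do
        n=$((n + 1))
        # Separate YAML documents with "---" (not before the first one).
        [ "$n" -eq 1 ] || echo "---"
        cat <<EOF
apiVersion: sriovnetwork.openshift.io/v1
kind: SriovIBNetwork
metadata:
  name: ${pf}
  namespace: network-operator
spec:
  ipam: |
    {
      "type": "whereabouts",
      "datastore": "kubernetes",
      "kubernetes": {
        "kubeconfig": "/etc/cni/net.d/whereabouts.d/whereabouts.kubeconfig"
      },
      "range": "192.168.${n}.0/24",
      "log_file": "/var/log/whereabouts.log",
      "log_level": "info"
    }
  resourceName: res${pf}
  linkState: enable
  networkNamespace: default
EOF
    done
}
```

Usage: `gen_ib_networks ibp12s0 ibp75s0 ibp141s0 ibp186s0 > ./network-operator/sriovibnetwork.yaml`. All generated subnets fall inside 192.168.0.0/16, the range that the NCCL test later passes to `btl_tcp_if_include`.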

Deploy the configuration files.

```shell
# kubectl apply -f ./network-operator/sriov-ib-network-node-policy.yaml
sriovnetworknodepolicy.sriovnetwork.openshift.io/ibp12s0 created
sriovnetworknodepolicy.sriovnetwork.openshift.io/ibp75s0 created
sriovnetworknodepolicy.sriovnetwork.openshift.io/ibp141s0 created
sriovnetworknodepolicy.sriovnetwork.openshift.io/ibp186s0 created

# kubectl apply -f ./network-operator/sriovibnetwork.yaml
sriovibnetwork.sriovnetwork.openshift.io/ibp12s0 created
sriovibnetwork.sriovnetwork.openshift.io/ibp75s0 created
sriovibnetwork.sriovnetwork.openshift.io/ibpi141s0 created
sriovibnetwork.sriovnetwork.openshift.io/ibp186s0 created
```
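After the policies are applied, the SR-IOV Network Operator reconfigures each node, which can take some time. One way to wait for it is to inspect the per-node SriovNetworkNodeState objects. This sketch makes two assumptions: that those objects expose `status.syncStatus`, as in the upstream sriov-network-operator CRD, and that they live in the network-operator namespace. `all_synced` is a hypothetical helper that succeeds only when every node reports `Succeeded`.

```shell
# Hypothetical helper: read "<node> <syncStatus>" lines on stdin and exit 0
# only if there is at least one node and every node reports Succeeded.
all_synced() {
    awk 'NF { total++; if ($2 == "Succeeded") ok++ }
         END { exit !(total > 0 && ok == total) }'
}
```

Usage:
`kubectl get sriovnetworknodestates -n network-operator -o jsonpath='{range .items[*]}{.metadata.name}{" "}{.status.syncStatus}{"\n"}{end}' | all_synced`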

Deploy the mpi-operator.

```shell
# kubectl apply -f https://raw.githubusercontent.com/kubeflow/mpi-operator/master/deploy/v2beta1/mpi-operator.yaml
namespace/mpi-operator created
customresourcedefinition.apiextensions.k8s.io/mpijobs.kubeflow.org created
serviceaccount/mpi-operator created
clusterrole.rbac.authorization.k8s.io/kubeflow-mpijobs-admin created
clusterrole.rbac.authorization.k8s.io/kubeflow-mpijobs-edit created
clusterrole.rbac.authorization.k8s.io/kubeflow-mpijobs-view created
clusterrole.rbac.authorization.k8s.io/mpi-operator created
clusterrolebinding.rbac.authorization.k8s.io/mpi-operator created
deployment.apps/mpi-operator created
```

Copy the Network Operator's /opt/cni/bin directory from a DGX node to /cm/shared, where the head node can access it.

```shell
# ssh dgx01
# cp -r /opt/cni/bin /cm/shared/dgx_opt_cni_bin
# exit
```

Create the network-validation.yaml file to run a simple validation test.

```shell
# vi network-operator/network-validation.yaml
```

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: network-validation-pod
spec:
  containers:
    - name: network-validation-pod
      image: docker.io/deepops/nccl-tests:latest
      imagePullPolicy: IfNotPresent
      command:
        - sh
        - -c
        - sleep inf
      securityContext:
        capabilities:
          add: ["IPC_LOCK"]
      resources:
        requests:
          nvidia.com/resibp75s0: "1"
          nvidia.com/resibp186s0: "1"
          nvidia.com/resibp12s0: "1"
          nvidia.com/resibp141s0: "1"
        limits:
          nvidia.com/resibp75s0: "1"
          nvidia.com/resibp186s0: "1"
          nvidia.com/resibp12s0: "1"
          nvidia.com/resibp141s0: "1"
```

Apply the network-validation.yaml file.

```shell
# kubectl apply -f ./network-operator/network-validation.yaml
pod/network-validation-pod created
```

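If the pod reaches the Running state without reporting any errors (check with `kubectl get pod network-validation-pod`), the network validation test has passed.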

Run a multi-node NCCL test.

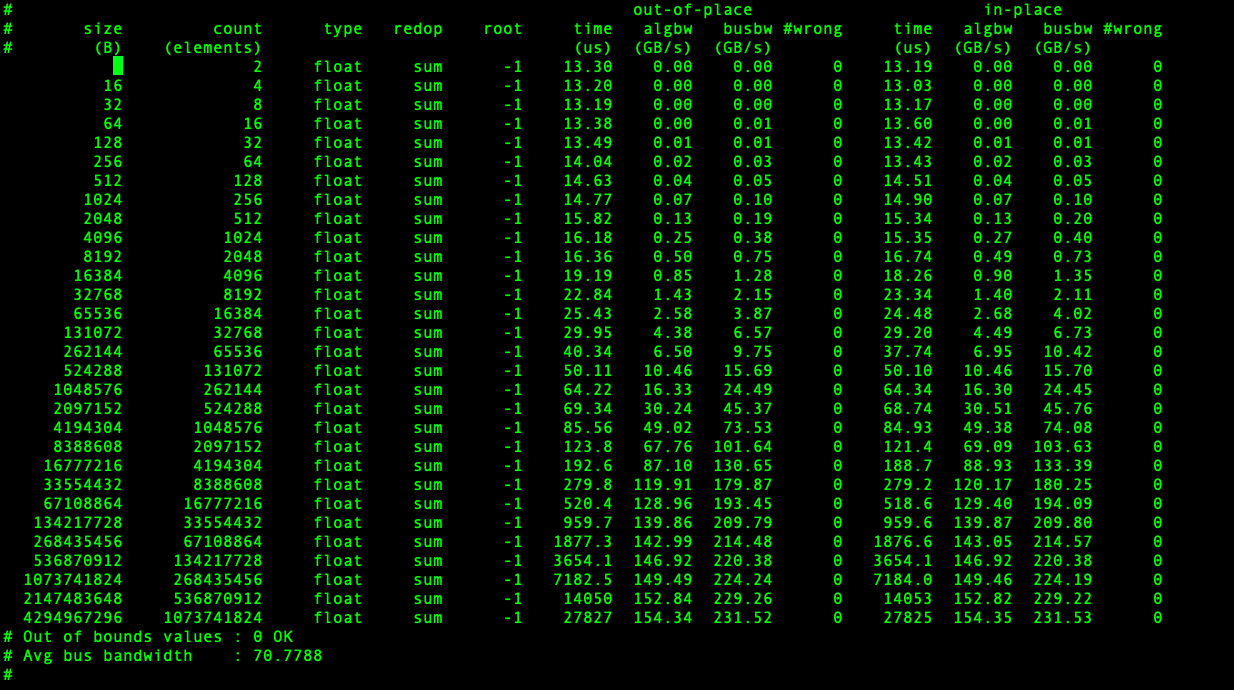

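The NVIDIA Collective Communication Library (NCCL) implements multi-GPU and multi-node communication primitives optimized for NVIDIA GPUs and networking, and it underpins many AI/ML training and deep learning applications. A successful multi-node NCCL test is a good indicator that multi-node MPI and NCCL communication between GPUs is working correctly.

Create the nccl_test.yaml file in the ./network-operator directory.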

```shell
# vi ./network-operator/nccl_test.yaml
```

```yaml
apiVersion: kubeflow.org/v2beta1
kind: MPIJob
metadata:
  name: nccltest
spec:
  slotsPerWorker: 8
  runPolicy:
    cleanPodPolicy: Running
  mpiReplicaSpecs:
    Launcher:
      replicas: 1
      template:
        spec:
          containers:
            - image: docker.io/deepops/nccl-tests:latest
              name: nccltest
              imagePullPolicy: IfNotPresent
              command:
                - sh
                - "-c"
                - |
                  /bin/bash << 'EOF'

                  mpirun --allow-run-as-root -np 4 -bind-to none -map-by slot \
                    -x NCCL_DEBUG=INFO -x NCCL_DEBUG_SUBSYS=NET -x NCCL_ALGO=RING \
                    -x NCCL_IB_DISABLE=0 -x LD_LIBRARY_PATH -x PATH \
                    -mca pml ob1 -mca btl self,tcp \
                    -mca btl_tcp_if_include 192.168.0.0/16 \
                    -mca oob_tcp_if_include 172.29.0.0/16 \
                    /nccl_tests/build/all_reduce_perf -b 8 -e 4G -f2 -g 1

                  EOF
    Worker:
      replicas: 4
      template:
        metadata:
        spec:
          containers:
            - image: docker.io/deepops/nccl-tests:latest
              name: nccltest
              imagePullPolicy: IfNotPresent
              securityContext:
                capabilities:
                  add: ["IPC_LOCK"]
              resources:
                limits:
                  nvidia.com/resibp12s0: "1"
                  nvidia.com/resibp75s0: "1"
                  nvidia.com/resibp141s0: "1"
                  nvidia.com/resibp186s0: "1"
                  nvidia.com/gpu: 8
```
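When reading the all_reduce_perf results, note that nccl-tests reports two bandwidth figures. For all-reduce, bus bandwidth is derived from algorithm bandwidth as busbw = algbw * 2 * (n - 1) / n. Here n is the total number of GPUs taking part; with `-g 1`, that equals the value passed to `mpirun -np`. The hypothetical `allreduce_busbw` function below applies the formula, which can help when checking a result by hand.

```shell
# Hypothetical helper: convert all-reduce algorithm bandwidth ($1, GB/s)
# for n ranks ($2) into bus bandwidth using busbw = algbw * 2 * (n - 1) / n.
allreduce_busbw() {
    awk -v algbw="$1" -v n="$2" 'BEGIN { printf "%.2f\n", algbw * 2 * (n - 1) / n }'
}
```

For example, `allreduce_busbw 10 4` prints `15.00`, because with four ranks the factor is 2 * 3 / 4 = 1.5.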

Run the NCCL test job defined in nccl_test.yaml.

```shell
# kubectl apply -f ./network-operator/nccl_test.yaml
mpijob.kubeflow.org/nccltest created

# kubectl get pods
NAME                      READY   STATUS    RESTARTS   AGE
nccltest-launcher-9pp28   1/1     Running   0          3m6s
nccltest-worker-0         1/1     Running   0          3m6s
nccltest-worker-1         1/1     Running   0          3m6s
nccltest-worker-2         1/1     Running   0          3m6s
nccltest-worker-3         1/1     Running   0          3m6s
```
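The launcher pod name carries a generated suffix (`9pp28` in the output above). A small filter saves copying it by hand. `launcher_pod` is a hypothetical helper that prints the first pod whose name starts with `<job>-launcher-`; the job name defaults to `nccltest`.

```shell
# Hypothetical helper: read `kubectl get pods` output on stdin and print the
# first pod name that starts with "<job>-launcher-" (job defaults to nccltest).
launcher_pod() {
    awk -v job="${1:-nccltest}" '$1 ~ ("^" job "-launcher-") { print $1; exit }'
}
```

Usage: `kubectl logs "$(kubectl get pods | launcher_pod nccltest)"`.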

To view the logs, run `kubectl logs nccltest-launcher-<ID>`. An example follows.