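Cumulus Linux Deployment Guide for VMware NSX-T

VMware NSX-T provides an agile software-defined infrastructure for building cloud-native application environments. It aims to deliver automation, operational simplicity, networking, and security. NSX-T supports many types of environments, such as multi-hypervisor, bare-metal workloads, and hybrid or public clouds. It serves as the control plane, data plane, and management plane for VMware virtualized overlay solutions.

A VMware virtualized environment requires all virtual and physical elements, such as ESXi hypervisors and NSX Edges, to communicate with each other over an underlay IP fabric. NVIDIA Spectrum switches, built on the Spectrum ASIC, provide the underlay hardware at speeds from 1 to 400 Gbps. With the NVIDIA Cumulus Linux operating system, you can configure the underlay fabric so that VMware NSX-T operates correctly.

This guide shows the physical infrastructure configuration required for some of the most common VMware NSX-T deployment use cases. The underlay fabric configuration is based on Cumulus Linux 5.X and uses the NVIDIA User Experience (NVUE) CLI.

We focus only on VM-to-VM and VM-to-bare-metal (BM) user traffic. The configurations therefore do not cover management, vMotion, and other networks.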

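This guide covers the following scenarios

To complete the scenarios above, we use the following features and protocols

- Multi-Chassis Link Aggregation (MLAG) for active-active physical layer 2 connectivity

- Virtual Router Redundancy (VRR and VRRP) for active-active, redundant layer 3 gateways

- Border Gateway Protocol (BGP) for underlay IP fabric connectivity between all physical and logical elements. We use Auto BGP and BGP Unnumbered for faster and easier fabric configuration; this is the preferred best practice.

- Virtual Extensible LAN (VXLAN) for the overlay encapsulation data plane

- Ethernet Virtual Private Network (EVPN) control plane to provide EVPN layer 2 extension for ESXi hosts

This guide does not cover NSX-T configuration. See this how-to guide for some examples.

For more information about VMware NSX-T design, installation, and configuration, see the following resources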

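The following reference topology is used throughout this guide. You can add new devices to match different scenarios.

Rack 1: two NVIDIA switches in MLAG and one ESXi hypervisor connected with an active-active bond

Rack 2: two NVIDIA switches in MLAG and one ESXi hypervisor connected with an active-active bond

Physical Connectivity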

cumulus@leaf01:mgmt:~$ net show lldp

LocalPort Speed Mode RemoteHost RemotePort

--------- ----- ------- --------------- -----------------

eth0 1G Mgmt oob-mgmt-switch swp10

swp1 1G Default esxi01 44:38:39:00:00:32

swp49 1G Default leaf02 swp49

swp50 1G Default leaf02 swp50

swp51 1G Default spine01 swp1

swp52 1G Default spine02 swp1

cumulus@leaf02:mgmt:~$ net show lldp

LocalPort Speed Mode RemoteHost RemotePort

--------- ----- ------- --------------- -----------------

eth0 1G Mgmt oob-mgmt-switch swp11

swp1 1G Default esxi01 44:38:39:00:00:38

swp49 1G Default leaf01 swp49

swp50 1G Default leaf01 swp50

swp51 1G Default spine01 swp2

swp52 1G Default spine02 swp2

cumulus@leaf03:mgmt:~$ net show lldp

LocalPort Speed Mode RemoteHost RemotePort

--------- ----- ------- --------------- -----------------

eth0 1G Mgmt oob-mgmt-switch swp12

swp1 1G Default esxi03 44:38:39:00:00:3e

swp49 1G Default leaf04 swp49

swp50 1G Default leaf04 swp50

swp51 1G Default spine01 swp3

swp52 1G Default spine02 swp3

cumulus@leaf04:mgmt:~$ net show lldp

LocalPort Speed Mode RemoteHost RemotePort

--------- ----- ------- --------------- -----------------

eth0 1G Mgmt oob-mgmt-switch swp13

swp1 1G Default esxi03 44:38:39:00:00:44

swp49 1G Default leaf03 swp49

swp50 1G Default leaf03 swp50

swp51 1G Default spine01 swp4

swp52 1G Default spine02 swp4

cumulus@spine01:mgmt:~$ net show lldp

LocalPort Speed Mode RemoteHost RemotePort

--------- ----- ------- --------------- ----------

eth0 1G Mgmt oob-mgmt-switch swp14

swp1 1G Default leaf01 swp51

swp2 1G Default leaf02 swp51

swp3 1G Default leaf03 swp51

swp4 1G Default leaf04 swp51

cumulus@spine02:mgmt:~$ net show lldp

LocalPort Speed Mode RemoteHost RemotePort

--------- ----- ------- --------------- ----------

eth0 1G Mgmt oob-mgmt-switch swp15

swp1 1G Default leaf01 swp52

swp2 1G Default leaf02 swp52

swp3 1G Default leaf03 swp52

swp4 1G Default leaf04 swp52

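MTU Configuration

VMware recommends configuring jumbo MTU (9 KB) end to end on all virtual and physical network elements: VMkernel ports, virtual switches (VDS), VDS port groups, N-VDS, and the underlay physical network. Geneve encapsulation requires an MTU of at least 1600 bytes (1700 bytes if you use extended options). VMware recommends an MTU of at least 9000 bytes along the entire network path, which improves throughput for storage, vSAN, vMotion, NFS, and vSphere Replication.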
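The arithmetic behind the 1600-byte minimum can be sketched as follows. This is an illustrative calculation, not part of NSX-T or Cumulus Linux; it assumes an IPv4 underlay, an untagged inner Ethernet frame, and the standard header sizes listed in the comments. The function names are hypothetical.

```python
# Standard header sizes in bytes for Geneve over UDP/IPv4.
INNER_ETHERNET = 14  # inner Ethernet header carried inside the tunnel (no VLAN tag assumed)
GENEVE_BASE = 8      # fixed Geneve header, before any options
OUTER_UDP = 8
OUTER_IPV4 = 20


def required_underlay_mtu(vm_mtu: int, geneve_options: int = 0) -> int:
    """Smallest underlay IP MTU that carries a VM packet of vm_mtu bytes unfragmented."""
    return vm_mtu + INNER_ETHERNET + GENEVE_BASE + geneve_options + OUTER_UDP + OUTER_IPV4


def option_headroom(underlay_mtu: int, vm_mtu: int) -> int:
    """Bytes left over for Geneve options at a given underlay MTU."""
    return underlay_mtu - required_underlay_mtu(vm_mtu)


if __name__ == "__main__":
    for underlay in (1600, 1700, 9000, 9216):
        print(f"underlay {underlay}: {option_headroom(underlay, vm_mtu=1500)} bytes of option headroom")
```

With a 1500-byte VM MTU, the fixed headers alone need 1550 bytes, so a 1600-byte underlay leaves 50 bytes for Geneve options and a 1700-byte underlay leaves 150.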

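Use the following command to check the MTU settings of the switch physical interfaces. By default, all switch ports (swp interfaces) on Cumulus Linux are configured with an MTU of 9216, so no additional configuration is required.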

cumulus@leaf01:mgmt:~$ nv show interface

Interface MTU Speed State Remote Host Remote Port Type Summary

--------- ----- ----- ----- --------------- ----------------- -------- -----------------------------

+ eth0 1500 1G up oob-mgmt-switch swp10 eth IP Address: 192.168.200.11/24

+ lo 65536 up loopback IP Address: 127.0.0.1/8

lo IP Address: ::1/128

+ swp1 9216 1G up esxi01 44:38:39:00:00:32 swp

+ swp49 9216 1G up leaf02 swp49 swp

+ swp50 9216 1G up leaf02 swp50 swp

+ swp51 9216 1G up spine01 swp1 swp

+ swp52 9216 1G up spine02 swp1 swp

cumulus@leaf02:mgmt:~$ nv show interface

Interface MTU Speed State Remote Host Remote Port Type Summary

--------- ----- ----- ----- --------------- ----------------- -------- -----------------------------

+ eth0 1500 1G up oob-mgmt-switch swp11 eth IP Address: 192.168.200.12/24

+ lo 65536 up loopback IP Address: 127.0.0.1/8

lo IP Address: ::1/128

+ swp1 9216 1G up esxi01 44:38:39:00:00:38 swp

+ swp49 9216 1G up leaf01 swp49 swp

+ swp50 9216 1G up leaf01 swp50 swp

+ swp51 9216 1G up spine01 swp2 swp

+ swp52 9216 1G up spine02 swp2 swp

cumulus@leaf03:mgmt:~$ nv show interface

Interface MTU Speed State Remote Host Remote Port Type Summary

--------- ----- ----- ----- --------------- ----------------- -------- -----------------------------

+ eth0 1500 1G up oob-mgmt-switch swp12 eth IP Address: 192.168.200.13/24

+ lo 65536 up loopback IP Address: 127.0.0.1/8

lo IP Address: ::1/128

+ swp1 9216 1G up esxi03 44:38:39:00:00:3e swp

+ swp49 9216 1G up leaf04 swp49 swp

+ swp50 9216 1G up leaf04 swp50 swp

+ swp51 9216 1G up spine01 swp3 swp

+ swp52 9216 1G up spine02 swp3 swp

cumulus@leaf04:mgmt:~$ nv show interface

Interface MTU Speed State Remote Host Remote Port Type Summary

--------- ----- ----- ----- --------------- ----------------- -------- -----------------------------

+ eth0 1500 1G up oob-mgmt-switch swp13 eth IP Address: 192.168.200.14/24

+ lo 65536 up loopback IP Address: 127.0.0.1/8

lo IP Address: ::1/128

+ swp1 9216 1G up esxi03 44:38:39:00:00:44 swp

+ swp49 9216 1G up leaf03 swp49 swp

+ swp50 9216 1G up leaf03 swp50 swp

+ swp51 9216 1G up spine01 swp4 swp

+ swp52 9216 1G up spine02 swp4 swp

cumulus@spine01:mgmt:~$ nv show interface

Interface MTU Speed State Remote Host Remote Port Type Summary

--------- ----- ----- ----- --------------- ----------- -------- -----------------------------

+ eth0 1500 1G up oob-mgmt-switch swp14 eth IP Address: 192.168.200.21/24

+ lo 65536 up loopback IP Address: 127.0.0.1/8

lo IP Address: ::1/128

+ swp1 9216 1G up leaf01 swp51 swp

+ swp2 9216 1G up leaf02 swp51 swp

+ swp3 9216 1G up leaf03 swp51 swp

+ swp4 9216 1G up leaf04 swp51 swp

cumulus@spine02:mgmt:~$ nv show interface

Interface MTU Speed State Remote Host Remote Port Type Summary

--------- ----- ----- ----- --------------- ----------- -------- ----------------------------

+ eth0 1500 1G up oob-mgmt-switch swp15 eth IP Address: 192.168.200.22/24

+ lo 65536 up loopback IP Address: 127.0.0.1/8

lo IP Address: ::1/128

+ swp1 9216 1G up leaf01 swp52 swp

+ swp2 9216 1G up leaf02 swp52 swp

+ swp3 9216 1G up leaf03 swp52 swp

+ swp4 9216 1G up leaf04 swp52 swp
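If you collect this output from many switches, a small script can flag any switch port below the recommended MTU. The following is a minimal sketch that assumes the whitespace-separated row layout shown above (interface name followed by MTU); the function name and the 9000-byte threshold parameter are illustrative choices, and the parsing is not an official NVUE interface.

```python
def low_mtu_ports(show_output: str, minimum: int = 9000) -> list[str]:
    """Return swp interfaces whose MTU is below `minimum`."""
    offenders = []
    for line in show_output.splitlines():
        # Drop the leading "+" marker, then split the row into columns.
        tokens = line.replace("+", " ").split()
        # Data rows look like: swp1 9216 1G up esxi01 ... swp
        if len(tokens) >= 2 and tokens[0].startswith("swp") and tokens[1].isdigit():
            if int(tokens[1]) < minimum:
                offenders.append(tokens[0])
    return offenders


# Rows copied from the leaf01 output above.
SAMPLE = """\
+ eth0 1500 1G up oob-mgmt-switch swp10 eth IP Address: 192.168.200.11/24
+ lo 65536 up loopback IP Address: 127.0.0.1/8
+ swp1 9216 1G up esxi01 44:38:39:00:00:32 swp
+ swp51 9216 1G up spine01 swp1 swp
"""

if __name__ == "__main__":
    print(low_mtu_ports(SAMPLE))
```

The management interface eth0 and the loopback are skipped on purpose, because only the swp ports carry Geneve traffic.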

Pure Virtualized Environment

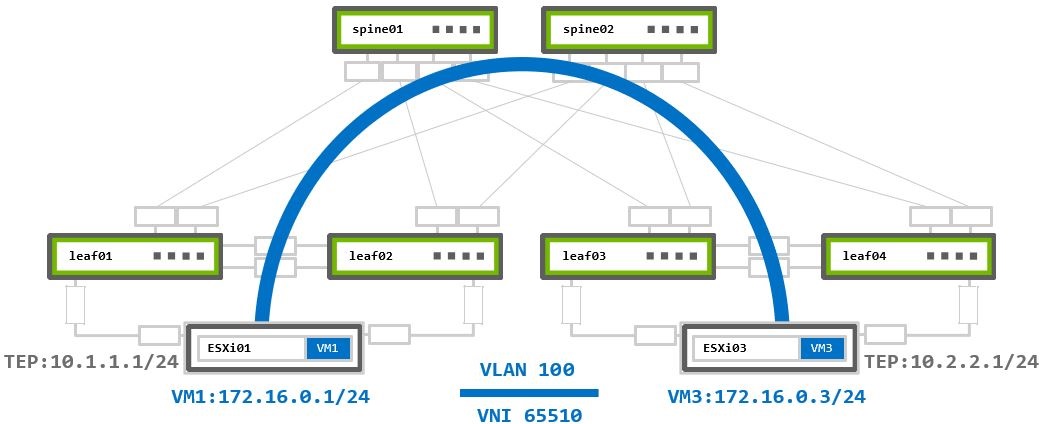

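This use case covers a basic VMware environment that is 100 percent virtualized over a pure IP fabric underlay. All communication takes place between virtual machines (VMs) located on ESXi hypervisors.

NSX-T uses Generic Network Virtualization Encapsulation (Geneve) as the overlay protocol to carry virtualized traffic over layer 2 tunnels on top of the layer 3 underlay fabric. Geneve is similar to the well-known VXLAN encapsulation, but has an extended header with more options. A kernel module on each NSX-T prepared host (an ESXi host added to NSX-T Manager) acts as the tunnel endpoint (TEP) device. TEP devices encapsulate and decapsulate traffic between virtual machines inside the virtualized network.

In the reference topology, the VMs are located on two different physical ESXi hypervisors. They are in the same IP subnet and connected to the same VMware logical switch. Because a layer 3 underlay network separates them, the NSX overlay provides VM-to-VM communication.

The ESXi hypervisors connect to the top-of-rack (ToR), or leaf, switches using an active-active LAG for redundancy and additional throughput. Cumulus Linux MLAG and VRR configurations support this ESXi requirement.

When a host connects to two switches, you must configure MLAG and VRR regardless of which N-VDS uplink profile is in use.

MLAG Configuration

Configure the MLAG parameters and the peerlink interface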

cumulus@leaf01:mgmt:~$ nv set interface peerlink bond member swp49-50

cumulus@leaf01:mgmt:~$ nv set interface peerlink type bond

cumulus@leaf01:mgmt:~$ nv set mlag mac-address 44:38:39:FF:00:01

cumulus@leaf01:mgmt:~$ nv set mlag backup 192.168.200.12 vrf mgmt

cumulus@leaf01:mgmt:~$ nv set mlag peer-ip linklocal

cumulus@leaf01:mgmt:~$ nv set mlag priority 1000

cumulus@leaf01:mgmt:~$ nv config apply -y

cumulus@leaf01:mgmt:~$ nv config save

cumulus@leaf02:mgmt:~$ nv set interface peerlink bond member swp49-50

cumulus@leaf02:mgmt:~$ nv set interface peerlink type bond

cumulus@leaf02:mgmt:~$ nv set mlag mac-address 44:38:39:FF:00:01

cumulus@leaf02:mgmt:~$ nv set mlag backup 192.168.200.11 vrf mgmt

cumulus@leaf02:mgmt:~$ nv set mlag peer-ip linklocal

cumulus@leaf02:mgmt:~$ nv set mlag priority 2000

cumulus@leaf02:mgmt:~$ nv config apply -y

cumulus@leaf02:mgmt:~$ nv config save

cumulus@leaf03:mgmt:~$ nv set interface peerlink bond member swp49-50

cumulus@leaf03:mgmt:~$ nv set interface peerlink type bond

cumulus@leaf03:mgmt:~$ nv set mlag mac-address 44:38:39:FF:00:02

cumulus@leaf03:mgmt:~$ nv set mlag backup 192.168.200.14 vrf mgmt

cumulus@leaf03:mgmt:~$ nv set mlag peer-ip linklocal

cumulus@leaf03:mgmt:~$ nv set mlag priority 1000

cumulus@leaf03:mgmt:~$ nv config apply -y

cumulus@leaf03:mgmt:~$ nv config save

cumulus@leaf04:mgmt:~$ nv set interface peerlink bond member swp49-50

cumulus@leaf04:mgmt:~$ nv set interface peerlink type bond

cumulus@leaf04:mgmt:~$ nv set mlag mac-address 44:38:39:FF:00:02

cumulus@leaf04:mgmt:~$ nv set mlag backup 192.168.200.13 vrf mgmt

cumulus@leaf04:mgmt:~$ nv set mlag peer-ip linklocal

cumulus@leaf04:mgmt:~$ nv set mlag priority 2000

cumulus@leaf04:mgmt:~$ nv config apply -y

cumulus@leaf04:mgmt:~$ nv config save
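The four command sets above differ only in three values: the MLAG system MAC (shared by both peers in a rack), the backup IP (the peer's eth0 address), and the priority. A minimal sketch of a generator that makes this pattern explicit is shown below. The function name and the `LEAVES` table are hypothetical helpers; the emitted strings are exactly the NVUE commands used in this guide.

```python
def mlag_commands(system_mac: str, backup_ip: str, priority: int,
                  peerlink_members: str = "swp49-50") -> list[str]:
    """Build the NVUE command list for one MLAG peer."""
    return [
        f"nv set interface peerlink bond member {peerlink_members}",
        "nv set interface peerlink type bond",
        f"nv set mlag mac-address {system_mac}",   # same value on both peers of a pair
        f"nv set mlag backup {backup_ip} vrf mgmt",  # management IP of the other peer
        "nv set mlag peer-ip linklocal",
        f"nv set mlag priority {priority}",
        "nv config apply -y",
        "nv config save",
    ]


# Values taken from the configuration shown above.
LEAVES = {
    "leaf01": ("44:38:39:FF:00:01", "192.168.200.12", 1000),
    "leaf02": ("44:38:39:FF:00:01", "192.168.200.11", 2000),
    "leaf03": ("44:38:39:FF:00:02", "192.168.200.14", 1000),
    "leaf04": ("44:38:39:FF:00:02", "192.168.200.13", 2000),
}

if __name__ == "__main__":
    for name, (mac, backup, prio) in LEAVES.items():
        print(f"# {name}")
        print("\n".join(mlag_commands(mac, backup, prio)))
```

In the verification output later in this guide, the peer with priority 1000 takes the primary role and the peer with priority 2000 the secondary role.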

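N-VDS Active-Active LACP LAG Uplink Profile

If you use the recommended active-active LAG (LACP) N-VDS uplink profile, you must bond the switch downlink interfaces for ESXi into an MLAG port (LACP bond).

Then add the bond interface to the default bridge br_default and set its stp and lacp parameters. This also automatically sets the MLAG bond as a trunk port (VLAN tagged) that allows all VLANs.

For more information about how to assign VLANs to trunk ports, see VLAN-aware Bridge Mode and Traditional Bridge Mode.

For active-standby (or active-active non-LAG) ESXi connectivity, do not configure MLAG ports, and do not use the active-active LACP LAG uplink profile for the overlay transport zone on the N-VDS.

Follow the instructions in the N-VDS Non-LAG Uplink Profile section to configure the switch ports.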

cumulus@leaf01:mgmt:~$ nv set interface esxi01 bond member swp1

cumulus@leaf01:mgmt:~$ nv set interface esxi01 type bond

cumulus@leaf01:mgmt:~$ nv set interface esxi01 bond mode lacp

cumulus@leaf01:mgmt:~$ nv set interface esxi01 bond mlag id 1

cumulus@leaf01:mgmt:~$ nv set interface esxi01 bridge domain br_default

cumulus@leaf01:mgmt:~$ nv set interface esxi01 bridge domain br_default stp bpdu-guard on

cumulus@leaf01:mgmt:~$ nv set interface esxi01 bridge domain br_default stp admin-edge on

cumulus@leaf01:mgmt:~$ nv set interface esxi01 bond lacp-bypass on

cumulus@leaf01:mgmt:~$ nv config apply -y

cumulus@leaf01:mgmt:~$ nv config save

cumulus@leaf02:mgmt:~$ nv set interface esxi01 bond member swp1

cumulus@leaf02:mgmt:~$ nv set interface esxi01 type bond

cumulus@leaf02:mgmt:~$ nv set interface esxi01 bond mode lacp

cumulus@leaf02:mgmt:~$ nv set interface esxi01 bond mlag id 1

cumulus@leaf02:mgmt:~$ nv set interface esxi01 bridge domain br_default

cumulus@leaf02:mgmt:~$ nv set interface esxi01 bridge domain br_default stp bpdu-guard on

cumulus@leaf02:mgmt:~$ nv set interface esxi01 bridge domain br_default stp admin-edge on

cumulus@leaf02:mgmt:~$ nv set interface esxi01 bond lacp-bypass on

cumulus@leaf02:mgmt:~$ nv config apply -y

cumulus@leaf02:mgmt:~$ nv config save

cumulus@leaf03:mgmt:~$ nv set interface esxi03 bond member swp1

cumulus@leaf03:mgmt:~$ nv set interface esxi03 type bond

cumulus@leaf03:mgmt:~$ nv set interface esxi03 bond mode lacp

cumulus@leaf03:mgmt:~$ nv set interface esxi03 bond mlag id 1

cumulus@leaf03:mgmt:~$ nv set interface esxi03 bridge domain br_default

cumulus@leaf03:mgmt:~$ nv set interface esxi03 bridge domain br_default stp bpdu-guard on

cumulus@leaf03:mgmt:~$ nv set interface esxi03 bridge domain br_default stp admin-edge on

cumulus@leaf03:mgmt:~$ nv set interface esxi03 bond lacp-bypass on

cumulus@leaf03:mgmt:~$ nv config apply -y

cumulus@leaf03:mgmt:~$ nv config save

cumulus@leaf04:mgmt:~$ nv set interface esxi03 bond member swp1

cumulus@leaf04:mgmt:~$ nv set interface esxi03 type bond

cumulus@leaf04:mgmt:~$ nv set interface esxi03 bond mode lacp

cumulus@leaf04:mgmt:~$ nv set interface esxi03 bond mlag id 1

cumulus@leaf04:mgmt:~$ nv set interface esxi03 bridge domain br_default

cumulus@leaf04:mgmt:~$ nv set interface esxi03 bridge domain br_default stp bpdu-guard on

cumulus@leaf04:mgmt:~$ nv set interface esxi03 bridge domain br_default stp admin-edge on

cumulus@leaf04:mgmt:~$ nv set interface esxi03 bond lacp-bypass on

cumulus@leaf04:mgmt:~$ nv config apply -y

cumulus@leaf04:mgmt:~$ nv config save
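In the commands above, the bond toward a given host uses the same name and the same MLAG ID on both peers of a rack; the two switches rely on that ID to pair their bonds. The following sketch checks two command lists for a mismatch before you apply them. The helper names are hypothetical, and the check only reads the command text; it does not talk to a switch.

```python
import re

# Matches the NVUE command form used in this guide.
MLAG_ID = re.compile(r"nv set interface (\S+) bond mlag id (\d+)")


def mlag_ids(commands: list[str]) -> dict[str, int]:
    """Map bond name to MLAG ID for every matching command."""
    ids = {}
    for cmd in commands:
        match = MLAG_ID.search(cmd)
        if match:
            ids[match.group(1)] = int(match.group(2))
    return ids


def mismatched_bonds(peer_a: list[str], peer_b: list[str]) -> list[str]:
    """Return bonds that are missing on one peer or use different MLAG IDs."""
    a, b = mlag_ids(peer_a), mlag_ids(peer_b)
    return sorted(name for name in a.keys() | b.keys() if a.get(name) != b.get(name))


if __name__ == "__main__":
    leaf01 = ["nv set interface esxi01 bond mlag id 1"]
    leaf02 = ["nv set interface esxi01 bond mlag id 1"]
    print(mismatched_bonds(leaf01, leaf02))
```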

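N-VDS Non-LAG Uplink Profile

If you use an active-standby or active-active non-LAG N-VDS uplink profile, configure the switch downlink interfaces for ESXi as regular switch ports; do not use any MLAG port configuration. You must add them to the default bridge br_default, which automatically sets them as trunk ports.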

cumulus@leaf01:mgmt:~$ nv set interface swp1 bridge domain br_default

cumulus@leaf01:mgmt:~$ nv config apply -y

cumulus@leaf01:mgmt:~$ nv config save

cumulus@leaf02:mgmt:~$ nv set interface swp1 bridge domain br_default

cumulus@leaf02:mgmt:~$ nv config apply -y

cumulus@leaf02:mgmt:~$ nv config save

cumulus@leaf03:mgmt:~$ nv set interface swp1 bridge domain br_default

cumulus@leaf03:mgmt:~$ nv config apply -y

cumulus@leaf03:mgmt:~$ nv config save

cumulus@leaf04:mgmt:~$ nv set interface swp1 bridge domain br_default

cumulus@leaf04:mgmt:~$ nv config apply -y

cumulus@leaf04:mgmt:~$ nv config save

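MLAG Configuration Verification

Use the nv show mlag command to verify the MLAG configuration, and the net show clag or clagctl command to view MLAG interface information. In this example, esxi01 and esxi03 are the MLAG bond interfaces connected to the ESXi hosts.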

cumulus@leaf01:mgmt:~$ nv show mlag

operational applied description

-------------- ----------------------- ----------------- ------------------------------------------------------

enable on Turn the feature 'on' or 'off'. The default is 'off'.

debug off Enable MLAG debugging

init-delay 100 The delay, in seconds, before bonds are brought up.

mac-address 44:38:39:ff:00:01 44:38:39:ff:00:01 Override anycast-mac and anycast-id

peer-ip fe80::4638:39ff:fe00:5a linklocal Peer Ip Address

priority 1000 2000 Mlag Priority

[backup] 192.168.200.12 192.168.200.12 Set of MLAG backups

backup-active False Mlag Backup Status

backup-reason Mlag Backup Reason

local-id 44:38:39:00:00:59 Mlag Local Unique Id

local-role primary Mlag Local Role

peer-alive True Mlag Peer Alive Status

peer-id 44:38:39:00:00:5a Mlag Peer Unique Id

peer-interface peerlink.4094 Mlag Peerlink Interface

peer-priority 2000 Mlag Peer Priority

peer-role secondary Mlag Peer Role

cumulus@leaf01:mgmt:~$ net show clag

The peer is alive

Our Priority, ID, and Role: 1000 44:38:39:00:00:59 primary

Peer Priority, ID, and Role: 2000 44:38:39:00:00:5a secondary

Peer Interface and IP: peerlink.4094 fe80::4638:39ff:fe00:5a (linklocal)

Backup IP: 192.168.200.12 vrf mgmt (active)

System MAC: 44:38:39:ff:00:01

CLAG Interfaces

Our Interface Peer Interface CLAG Id Conflicts Proto-Down Reason

---------------- ---------------- ------- -------------------- -----------------

esxi01 esxi01 1 - -

cumulus@leaf02:mgmt:~$ nv show mlag

operational applied description

-------------- ----------------------- ----------------- ------------------------------------------------------

enable on Turn the feature 'on' or 'off'. The default is 'off'.

debug off Enable MLAG debugging

init-delay 100 The delay, in seconds, before bonds are brought up.

mac-address 44:38:39:ff:00:01 44:38:39:ff:00:01 Override anycast-mac and anycast-id

peer-ip fe80::4638:39ff:fe00:59 linklocal Peer Ip Address

priority 2000 1000 Mlag Priority

[backup] 192.168.200.11 192.168.200.11 Set of MLAG backups

backup-active False Mlag Backup Status

backup-reason Mlag Backup Reason

local-id 44:38:39:00:00:5a Mlag Local Unique Id

local-role secondary Mlag Local Role

peer-alive True Mlag Peer Alive Status

peer-id 44:38:39:00:00:59 Mlag Peer Unique Id

peer-interface peerlink.4094 Mlag Peerlink Interface

peer-priority 1000 Mlag Peer Priority

peer-role primary Mlag Peer Role

cumulus@leaf02:mgmt:~$ net show clag

The peer is alive

Our Priority, ID, and Role: 2000 44:38:39:00:00:5a secondary

Peer Priority, ID, and Role: 1000 44:38:39:00:00:59 primary

Peer Interface and IP: peerlink.4094 fe80::4638:39ff:fe00:59 (linklocal)

Backup IP: 192.168.200.11 vrf mgmt (active)

System MAC: 44:38:39:ff:00:01

CLAG Interfaces

Our Interface Peer Interface CLAG Id Conflicts Proto-Down Reason

---------------- ---------------- ------- -------------------- -----------------

esxi01 esxi01 1 - -

cumulus@leaf03:mgmt:~$ nv show mlag

operational applied description

-------------- ----------------------- ----------------- ------------------------------------------------------

enable on Turn the feature 'on' or 'off'. The default is 'off'.

debug off Enable MLAG debugging

init-delay 100 The delay, in seconds, before bonds are brought up.

mac-address 44:38:39:ff:00:02 44:38:39:ff:00:02 Override anycast-mac and anycast-id

peer-ip fe80::4638:39ff:fe00:5e linklocal Peer Ip Address

priority 1000 2000 Mlag Priority

[backup] 192.168.200.14 192.168.200.14 Set of MLAG backups

backup-active False Mlag Backup Status

backup-reason Mlag Backup Reason

local-id 44:38:39:00:00:5d Mlag Local Unique Id

local-role primary Mlag Local Role

peer-alive True Mlag Peer Alive Status

peer-id 44:38:39:00:00:5e Mlag Peer Unique Id

peer-interface peerlink.4094 Mlag Peerlink Interface

peer-priority 2000 Mlag Peer Priority

peer-role secondary Mlag Peer Role

cumulus@leaf03:mgmt:~$ net show clag

The peer is alive

Our Priority, ID, and Role: 1000 44:38:39:00:00:5d primary

Peer Priority, ID, and Role: 2000 44:38:39:00:00:5e secondary

Peer Interface and IP: peerlink.4094 fe80::4638:39ff:fe00:5e (linklocal)

Backup IP: 192.168.200.14 vrf mgmt (active)

System MAC: 44:38:39:ff:00:02

CLAG Interfaces

Our Interface Peer Interface CLAG Id Conflicts Proto-Down Reason

---------------- ---------------- ------- -------------------- -----------------

esxi03 esxi03 1 - -

cumulus@leaf04:mgmt:~$ nv show mlag

operational applied description

-------------- ----------------------- ----------------- ------------------------------------------------------

enable on Turn the feature 'on' or 'off'. The default is 'off'.

debug off Enable MLAG debugging

init-delay 100 The delay, in seconds, before bonds are brought up.

mac-address 44:38:39:ff:00:02 44:38:39:ff:00:02 Override anycast-mac and anycast-id

peer-ip fe80::4638:39ff:fe00:5d linklocal Peer Ip Address

priority 2000 1000 Mlag Priority

[backup] 192.168.200.13 192.168.200.13 Set of MLAG backups

backup-active False Mlag Backup Status

backup-reason Mlag Backup Reason

local-id 44:38:39:00:00:5e Mlag Local Unique Id

local-role secondary Mlag Local Role

peer-alive True Mlag Peer Alive Status

peer-id 44:38:39:00:00:5d Mlag Peer Unique Id

peer-interface peerlink.4094 Mlag Peerlink Interface

peer-priority 1000 Mlag Peer Priority

peer-role primary Mlag Peer Role

cumulus@leaf04:mgmt:~$ net show clag

The peer is alive

Our Priority, ID, and Role: 2000 44:38:39:00:00:5e secondary

Peer Priority, ID, and Role: 1000 44:38:39:00:00:5d primary

Peer Interface and IP: peerlink.4094 fe80::4638:39ff:fe00:5d (linklocal)

Backup IP: 192.168.200.13 vrf mgmt (active)

System MAC: 44:38:39:ff:00:02

CLAG Interfaces

Our Interface Peer Interface CLAG Id Conflicts Proto-Down Reason

---------------- ---------------- ------- -------------------- -----------------

esxi03 esxi03 1 - -
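The fields worth checking in the net show clag output are the peer state, the local role, and the Conflicts and Proto-Down Reason columns of each MLAG bond. The sketch below extracts them from the text format shown above. It is an illustrative parser with a hypothetical function name, and it assumes that a healthy bond row ends with two "-" columns, as in these examples.

```python
def clag_health(output: str) -> dict:
    """Summarize `net show clag` text: peer state, local role, problem bonds."""
    lines = [line.strip() for line in output.splitlines() if line.strip()]
    peer_alive = "The peer is alive" in lines
    role = None
    problem_bonds = []
    in_table = False
    for line in lines:
        if line.startswith("Our Priority, ID, and Role:"):
            role = line.split()[-1]
        elif line.startswith("----"):
            in_table = True  # rows after the dashed rule are MLAG bonds
        elif in_table:
            fields = line.split()
            # Healthy row: our bond, peer bond, CLAG id, "-", "-"
            if len(fields) < 5 or fields[1] == "-" or fields[3] != "-" or fields[4] != "-":
                problem_bonds.append(fields[0])
    return {"peer_alive": peer_alive, "role": role, "problem_bonds": problem_bonds}


# Text copied from the leaf01 output above.
SAMPLE = """\
The peer is alive
Our Priority, ID, and Role: 1000 44:38:39:00:00:59 primary
Peer Priority, ID, and Role: 2000 44:38:39:00:00:5a secondary
Peer Interface and IP: peerlink.4094 fe80::4638:39ff:fe00:5a (linklocal)
Backup IP: 192.168.200.12 vrf mgmt (active)
System MAC: 44:38:39:ff:00:01
CLAG Interfaces
Our Interface Peer Interface CLAG Id Conflicts Proto-Down Reason
---------------- ---------------- ------- -------------------- -----------------
esxi01 esxi01 1 - -
"""

if __name__ == "__main__":
    print(clag_health(SAMPLE))
```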

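In the non-LAG N-VDS uplink profile scenario, MLAG ports do not appear in the show output. The absence of MLAG ports does not mean that MLAG is not working.

MLAG is mandatory for using VRR (also known as enhanced VRRP), so the non-LAG uplink profile requires it as well.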

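VRR Configuration

You can set the ESXi TEP IP addresses in the same subnet or in different subnets. The VMware best practice for TEP IP pools is to assign a different subnet to each physical rack, which simplifies operations.

VRR on the ToR switches provides a redundant, active-active default gateway for the TEP subnet of the ESXi servers.

VMware requires one VLAN per traffic type, for example, storage, vSAN, vMotion, or overlay (TEP) traffic. You must therefore configure an SVI and a VRR instance for each traffic type. This example shows only overlay VM-to-VM communication, so the switch configuration shows only the TEP VLAN and its SVI and VRR.

Because this guide only shows how to handle virtualized traffic, all configuration examples are based on the default VRF. In many cases, different VMware networks (traffic types) are separated by different VRFs (for example, the Mgmt network in vrfX and the TEP network in vrfY). In that case, create a custom VRF for each network, place the SVIs in it, and configure the required BGP underlay.

For more information, see the Virtual Routing and Forwarding - VRF documentation and its BGP section.

The VLAN ID is a local parameter and is not shared between hypervisors. For deployment simplicity, use the same VLAN ID for all TEP devices across racks. Our deployment uses VLAN 100.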

cumulus@leaf01:mgmt:~$ nv set bridge domain br_default vlan 100

cumulus@leaf01:mgmt:~$ nv set interface vlan100 ip address 10.1.1.252/24

cumulus@leaf01:mgmt:~$ nv set interface vlan100 ip vrr address 10.1.1.254/24

cumulus@leaf01:mgmt:~$ nv set interface vlan100 ip vrr mac-address 00:00:00:00:01:00

cumulus@leaf01:mgmt:~$ nv set interface vlan100 ip vrr state up

cumulus@leaf01:mgmt:~$ nv config apply -y

cumulus@leaf01:mgmt:~$ nv config save

cumulus@leaf02:mgmt:~$ nv set bridge domain br_default vlan 100

cumulus@leaf02:mgmt:~$ nv set interface vlan100 ip address 10.1.1.253/24

cumulus@leaf02:mgmt:~$ nv set interface vlan100 ip vrr address 10.1.1.254/24

cumulus@leaf02:mgmt:~$ nv set interface vlan100 ip vrr mac-address 00:00:00:00:01:00

cumulus@leaf02:mgmt:~$ nv set interface vlan100 ip vrr state up

cumulus@leaf02:mgmt:~$ nv config apply -y

cumulus@leaf02:mgmt:~$ nv config save

cumulus@leaf03:mgmt:~$ nv set bridge domain br_default vlan 100

cumulus@leaf03:mgmt:~$ nv set interface vlan100 ip address 10.2.2.252/24

cumulus@leaf03:mgmt:~$ nv set interface vlan100 ip vrr address 10.2.2.254/24

cumulus@leaf03:mgmt:~$ nv set interface vlan100 ip vrr mac-address 00:00:00:01:00:00

cumulus@leaf03:mgmt:~$ nv set interface vlan100 ip vrr state up

cumulus@leaf03:mgmt:~$ nv config apply -y

cumulus@leaf03:mgmt:~$ nv config save

cumulus@leaf04:mgmt:~$ nv set bridge domain br_default vlan 100

cumulus@leaf04:mgmt:~$ nv set interface vlan100 ip address 10.2.2.253/24

cumulus@leaf04:mgmt:~$ nv set interface vlan100 ip vrr address 10.2.2.254/24

cumulus@leaf04:mgmt:~$ nv set interface vlan100 ip vrr mac-address 00:00:00:01:00:00

cumulus@leaf04:mgmt:~$ nv set interface vlan100 ip vrr state up

cumulus@leaf04:mgmt:~$ nv config apply -y

cumulus@leaf04:mgmt:~$ nv config save
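The addressing pattern in these commands is that each leaf has its own SVI address, both leaves in a rack share one VRR gateway address, and all three sit in the same rack-specific subnet. A minimal sketch of a sanity check for that plan, using only the Python standard library, is shown below; the function name is hypothetical and the addresses are the ones configured above.

```python
import ipaddress


def check_vrr_pair(svi_a: str, svi_b: str, vrr: str) -> list[str]:
    """Return a list of problems found in one rack's SVI/VRR address plan."""
    a = ipaddress.ip_interface(svi_a)
    b = ipaddress.ip_interface(svi_b)
    v = ipaddress.ip_interface(vrr)
    problems = []
    if not (a.network == b.network == v.network):
        problems.append("addresses are not in the same subnet")
    if len({a.ip, b.ip, v.ip}) != 3:
        problems.append("SVI and VRR addresses must all be distinct")
    return problems


if __name__ == "__main__":
    # Rack 1: leaf01, leaf02, shared VRR gateway
    print(check_vrr_pair("10.1.1.252/24", "10.1.1.253/24", "10.1.1.254/24"))
    # Rack 2: leaf03, leaf04, shared VRR gateway
    print(check_vrr_pair("10.2.2.252/24", "10.2.2.253/24", "10.2.2.254/24"))
```

An empty list means the plan is consistent. The check says nothing about the VRR MAC address, which also has to match on both peers of a rack.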

VRR Configuration Verification

Use the nv show interface command to check the status of the SVIs and VRR. In this output, the SVI and VRR interfaces appear as vlanXXX and vlanXXX-v0.

cumulus@leaf01:mgmt:~$ nv show interface

Interface MTU Speed State Remote Host Remote Port Type Summary

---------------- ----- ----- ----- --------------- ----------------- -------- -----------------------------

+ esxi01 9216 1G up bond

+ eth0 1500 1G up oob-mgmt-switch swp10 eth IP Address: 192.168.200.11/24

+ lo 65536 up loopback IP Address: 127.0.0.1/8

lo IP Address: ::1/128

+ peerlink 9216 2G up bond

+ peerlink.4094 9216 up sub

+ swp1 9216 1G up esxi01 44:38:39:00:00:32 swp

+ swp49 9216 1G up leaf02 swp49 swp

+ swp50 9216 1G up leaf02 swp50 swp

+ swp51 9216 1G up spine01 swp1 swp

+ swp52 9216 1G up spine02 swp1 swp

+ vlan100 9216 up svi IP Address: 10.1.1.252/24

+ vlan100-v0 9216 up svi IP Address: 10.1.1.254/24

cumulus@leaf02:mgmt:~$ nv show interface

Interface MTU Speed State Remote Host Remote Port Type Summary

---------------- ----- ----- ----- --------------- ----------------- -------- -----------------------------

+ esxi01 9216 1G up bond

+ eth0 1500 1G up oob-mgmt-switch swp11 eth IP Address: 192.168.200.12/24

+ lo 65536 up loopback IP Address: 127.0.0.1/8

lo IP Address: ::1/128

+ peerlink 9216 2G up bond

+ peerlink.4094 9216 up sub

+ swp1 9216 1G up esxi01 44:38:39:00:00:38 swp

+ swp49 9216 1G up leaf01 swp49 swp

+ swp50 9216 1G up leaf01 swp50 swp

+ swp51 9216 1G up spine01 swp2 swp

+ swp52 9216 1G up spine02 swp2 swp

+ vlan100 9216 up svi IP Address: 10.1.1.253/24

+ vlan100-v0 9216 up svi IP Address: 10.1.1.254/24

cumulus@leaf03:mgmt:~$ nv show interface

Interface MTU Speed State Remote Host Remote Port Type Summary

---------------- ----- ----- ----- --------------- ----------------- -------- -----------------------------

+ esxi03 9216 1G up bond

+ eth0 1500 1G up oob-mgmt-switch swp12 eth IP Address: 192.168.200.13/24

+ lo 65536 up loopback IP Address: 127.0.0.1/8

lo IP Address: ::1/128

+ peerlink 9216 2G up bond

+ peerlink.4094 9216 up sub

+ swp1 9216 1G up esxi03 44:38:39:00:00:3e swp

+ swp49 9216 1G up leaf04 swp49 swp

+ swp50 9216 1G up leaf04 swp50 swp

+ swp51 9216 1G up spine01 swp3 swp

+ swp52 9216 1G up spine02 swp3 swp

+ vlan100 9216 up svi IP Address: 10.2.2.252/24

+ vlan100-v0 9216 up svi IP Address: 10.2.2.254/24

cumulus@leaf04:mgmt:~$ nv show interface

Interface MTU Speed State Remote Host Remote Port Type Summary

---------------- ----- ----- ----- --------------- ----------------- -------- -----------------------------

+ esxi03 9216 1G up bond

+ eth0 1500 1G up oob-mgmt-switch swp13 eth IP Address: 192.168.200.14/24

+ lo 65536 up loopback IP Address: 127.0.0.1/8

lo IP Address: ::1/128

+ peerlink 9216 2G up bond

+ peerlink.4094 9216 up sub

+ swp1 9216 1G up esxi03 44:38:39:00:00:44 swp

+ swp49 9216 1G up leaf03 swp49 swp

+ swp50 9216 1G up leaf03 swp50 swp

+ swp51 9216 1G up spine01 swp4 swp

+ swp52 9216 1G up spine02 swp4 swp

+ vlan100 9216 up svi IP Address: 10.2.2.253/24

+ vlan100-v0 9216 up svi IP Address: 10.2.2.254/24

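BGP Underlay Network Configuration

All underlay IP fabric BGP peerings in this guide use eBGP as the basis for the configuration. For a simple BGP configuration, use Cumulus Linux Auto BGP and BGP Unnumbered.

The Auto BGP leaf and spine keywords are used only to configure the ASN. The configuration files and nv show commands display the resulting AS number.

Auto BGP configuration is only available with NVUE. If you configure BGP with vtysh instead, you must set the BGP ASN explicitly.

For more details, see the Configuring FRRouting documentation.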

cumulus@leaf01:mgmt:~$ nv set router bgp enable on

cumulus@leaf01:mgmt:~$ nv set vrf default router bgp autonomous-system leaf

cumulus@leaf01:mgmt:~$ nv set vrf default router bgp neighbor peerlink.4094 remote-as external

cumulus@leaf01:mgmt:~$ nv set vrf default router bgp neighbor swp51 remote-as external

cumulus@leaf01:mgmt:~$ nv set vrf default router bgp neighbor swp52 remote-as external

cumulus@leaf01:mgmt:~$ nv set vrf default router bgp router-id 10.10.10.1

cumulus@leaf01:mgmt:~$ nv config apply -y

cumulus@leaf01:mgmt:~$ nv config save

cumulus@leaf02:mgmt:~$ nv set router bgp enable on

cumulus@leaf02:mgmt:~$ nv set vrf default router bgp autonomous-system leaf

cumulus@leaf02:mgmt:~$ nv set vrf default router bgp neighbor peerlink.4094 remote-as external

cumulus@leaf02:mgmt:~$ nv set vrf default router bgp neighbor swp51 remote-as external

cumulus@leaf02:mgmt:~$ nv set vrf default router bgp neighbor swp52 remote-as external

cumulus@leaf02:mgmt:~$ nv set vrf default router bgp router-id 10.10.10.2

cumulus@leaf02:mgmt:~$ nv config apply -y

cumulus@leaf02:mgmt:~$ nv config save

cumulus@leaf03:mgmt:~$ nv set router bgp enable on

cumulus@leaf03:mgmt:~$ nv set vrf default router bgp autonomous-system leaf

cumulus@leaf03:mgmt:~$ nv set vrf default router bgp neighbor peerlink.4094 remote-as external

cumulus@leaf03:mgmt:~$ nv set vrf default router bgp neighbor swp51 remote-as external

cumulus@leaf03:mgmt:~$ nv set vrf default router bgp neighbor swp52 remote-as external

cumulus@leaf03:mgmt:~$ nv set vrf default router bgp router-id 10.10.10.3

cumulus@leaf03:mgmt:~$ nv config apply -y

cumulus@leaf03:mgmt:~$ nv config save

cumulus@leaf04:mgmt:~$ nv set router bgp enable on

cumulus@leaf04:mgmt:~$ nv set vrf default router bgp autonomous-system leaf

cumulus@leaf04:mgmt:~$ nv set vrf default router bgp neighbor peerlink.4094 remote-as external

cumulus@leaf04:mgmt:~$ nv set vrf default router bgp neighbor swp51 remote-as external

cumulus@leaf04:mgmt:~$ nv set vrf default router bgp neighbor swp52 remote-as external

cumulus@leaf04:mgmt:~$ nv set vrf default router bgp router-id 10.10.10.4

cumulus@leaf04:mgmt:~$ nv config apply -y

cumulus@leaf04:mgmt:~$ nv config save

cumulus@spine01:mgmt:~$ nv set router bgp enable on

cumulus@spine01:mgmt:~$ nv set vrf default router bgp autonomous-system spine

cumulus@spine01:mgmt:~$ nv set vrf default router bgp neighbor swp1 remote-as external

cumulus@spine01:mgmt:~$ nv set vrf default router bgp neighbor swp2 remote-as external

cumulus@spine01:mgmt:~$ nv set vrf default router bgp neighbor swp3 remote-as external

cumulus@spine01:mgmt:~$ nv set vrf default router bgp neighbor swp4 remote-as external

cumulus@spine01:mgmt:~$ nv set vrf default router bgp path-selection multipath aspath-ignore on

cumulus@spine01:mgmt:~$ nv set vrf default router bgp router-id 10.10.10.101

cumulus@spine01:mgmt:~$ nv config apply -y

cumulus@spine01:mgmt:~$ nv config save

cumulus@spine02:mgmt:~$ nv set router bgp enable on

cumulus@spine02:mgmt:~$ nv set vrf default router bgp autonomous-system spine

cumulus@spine02:mgmt:~$ nv set vrf default router bgp neighbor swp1 remote-as external

cumulus@spine02:mgmt:~$ nv set vrf default router bgp neighbor swp2 remote-as external

cumulus@spine02:mgmt:~$ nv set vrf default router bgp neighbor swp3 remote-as external

cumulus@spine02:mgmt:~$ nv set vrf default router bgp neighbor swp4 remote-as external

cumulus@spine02:mgmt:~$ nv set vrf default router bgp path-selection multipath aspath-ignore on

cumulus@spine02:mgmt:~$ nv set vrf default router bgp router-id 10.10.10.102

cumulus@spine02:mgmt:~$ nv config apply -y

cumulus@spine02:mgmt:~$ nv config save

cumulus@leaf01:~$ sudo vtysh

leaf01# configure terminal

leaf01(config)# router bgp 65101

leaf01(config-router)# bgp router-id 10.10.10.1

leaf01(config-router)# neighbor peerlink.4094 interface remote-as external

leaf01(config-router)# neighbor swp51 interface remote-as external

leaf01(config-router)# neighbor swp52 interface remote-as external

leaf01(config-router)# end

leaf01# write memory

leaf01# exit

cumulus@leaf02:~$ sudo vtysh

leaf02# configure terminal

leaf02(config)# router bgp 65102

leaf02(config-router)# bgp router-id 10.10.10.2

leaf02(config-router)# neighbor peerlink.4094 interface remote-as external

leaf02(config-router)# neighbor swp51 interface remote-as external

leaf02(config-router)# neighbor swp52 interface remote-as external

leaf02(config-router)# end

leaf02# write memory

leaf02# exit

cumulus@leaf03:~$ sudo vtysh

leaf03# configure terminal

leaf03(config)# router bgp 65103

leaf03(config-router)# bgp router-id 10.10.10.3

leaf03(config-router)# neighbor peerlink.4094 interface remote-as external

leaf03(config-router)# neighbor swp51 interface remote-as external

leaf03(config-router)# neighbor swp52 interface remote-as external

leaf03(config-router)# end

leaf03# write memory

leaf03# exit

cumulus@leaf04:~$ sudo vtysh

leaf04# configure terminal

leaf04(config)# router bgp 65104

leaf04(config-router)# bgp router-id 10.10.10.4

leaf04(config-router)# neighbor peerlink.4094 interface remote-as external

leaf04(config-router)# neighbor swp51 interface remote-as external

leaf04(config-router)# neighbor swp52 interface remote-as external

leaf04(config-router)# end

leaf04# write memory

leaf04# exit

cumulus@spine01:~$ sudo vtysh

spine01# configure terminal

spine01(config)# router bgp 65199

spine01(config-router)# bgp router-id 10.10.10.101

spine01(config-router)# neighbor swp1 interface remote-as external

spine01(config-router)# neighbor swp2 interface remote-as external

spine01(config-router)# neighbor swp3 interface remote-as external

spine01(config-router)# neighbor swp4 interface remote-as external

spine01(config-router)# bgp bestpath as-path multipath-relax

spine01(config-router)# end

spine01# write memory

spine01# exit

cumulus@spine02:~$ sudo vtysh

spine02# configure terminal

spine02(config)# router bgp 65199

spine02(config-router)# bgp router-id 10.10.10.102

spine02(config-router)# neighbor swp1 interface remote-as external

spine02(config-router)# neighbor swp2 interface remote-as external

spine02(config-router)# neighbor swp3 interface remote-as external

spine02(config-router)# neighbor swp4 interface remote-as external

spine02(config-router)# bgp bestpath as-path multipath-relax

spine02(config-router)# end

spine02# write memory

spine02# exit

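TEP VLAN Subnet Advertisement into BGP

ESXi hypervisors build layer 2 overlay tunnels to send Geneve-encapsulated traffic over the layer 3 underlay fabric. The underlay IP fabric must know every TEP device address in the network. Advertising the local overlay TEP VLANs (TEP subnets) that you created earlier into BGP achieves this.

Use the redistribute connected command to inject directly connected routes into BGP. You can also combine this command with filtering to prevent unwanted routes from being advertised into BGP. For more information, see the Route Filtering and Redistribution documentation.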
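To illustrate what filtering does conceptually, the sketch below keeps only the connected prefixes that fall inside an allowed range. It models the idea in plain Python and is not Cumulus Linux or FRR configuration. The function name, the 10.0.0.0/8 allow range, and the 172.16.0.0/24 prefix are hypothetical; 10.1.1.0/24 is the rack 1 TEP subnet from this guide.

```python
import ipaddress


def filter_connected(connected: list[str], allowed: list[str]) -> list[str]:
    """Keep the connected prefixes that lie inside one of the allowed ranges."""
    allowed_nets = [ipaddress.ip_network(prefix) for prefix in allowed]
    kept = []
    for prefix in connected:
        net = ipaddress.ip_network(prefix)
        # subnet_of() requires both networks to be the same IP version.
        if any(net.version == a.version and net.subnet_of(a) for a in allowed_nets):
            kept.append(prefix)
    return kept


if __name__ == "__main__":
    connected = ["10.1.1.0/24", "172.16.0.0/24"]  # second prefix is a made-up example
    print(filter_connected(connected, allowed=["10.0.0.0/8"]))
```

On the switch, the equivalent control is a route map or prefix list attached to the redistribution, as described in the route filtering documentation.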

cumulus@leaf01:mgmt:~$ nv set vrf default router bgp address-family ipv4-unicast enable on

cumulus@leaf01:mgmt:~$ nv set vrf default router bgp address-family ipv4-unicast redistribute connected enable on

cumulus@leaf01:mgmt:~$ nv config apply -y

cumulus@leaf01:mgmt:~$ nv config save

cumulus@leaf02:mgmt:~$ nv set vrf default router bgp address-family ipv4-unicast enable on

cumulus@leaf02:mgmt:~$ nv set vrf default router bgp address-family ipv4-unicast redistribute connected enable on

cumulus@leaf02:mgmt:~$ nv config apply -y

cumulus@leaf02:mgmt:~$ nv config save

cumulus@leaf03:mgmt:~$ nv set vrf default router bgp address-family ipv4-unicast enable on

cumulus@leaf03:mgmt:~$ nv set vrf default router bgp address-family ipv4-unicast redistribute connected enable on

cumulus@leaf03:mgmt:~$ nv config apply -y

cumulus@leaf03:mgmt:~$ nv config save

cumulus@leaf04:mgmt:~$ nv set vrf default router bgp address-family ipv4-unicast enable on

cumulus@leaf04:mgmt:~$ nv set vrf default router bgp address-family ipv4-unicast redistribute connected enable on

cumulus@leaf04:mgmt:~$ nv config apply -y

cumulus@leaf04:mgmt:~$ nv config save

cumulus@leaf01:~$ sudo vtysh

leaf01# configure terminal

leaf01(config)# router bgp

leaf01(config-router)# address-family ipv4 unicast

leaf01(config-router-af)# redistribute connected

leaf01(config-router-af)# end

leaf01# write memory

leaf01# exit

cumulus@leaf02:~$ sudo vtysh

leaf02# configure terminal

leaf02(config)# router bgp

leaf02(config-router)# address-family ipv4 unicast

leaf02(config-router-af)# redistribute connected

leaf02(config-router-af)# end

leaf02# write memory

leaf02# exit

cumulus@leaf03:~$ sudo vtysh

leaf03# configure terminal

leaf03(config)# router bgp

leaf03(config-router)# address-family ipv4 unicast

leaf03(config-router-af)# redistribute connected

leaf03(config-router-af)# end

leaf03# write memory

leaf03# exit

cumulus@leaf04:~$ sudo vtysh

leaf04# configure terminal

leaf04(config)# router bgp

leaf04(config-router)# address-family ipv4 unicast

leaf04(config-router-af)# redistribute connected

leaf04(config-router-af)# end

leaf04# write memory

leaf04# exit

BGP Peering and Route Advertisement Verification

To verify BGP peering state and information, use the net show bgp summary command in NVUE or the show ip bgp summary command in vtysh.

cumulus@leaf01:mgmt:~$ net show bgp summary

show bgp ipv4 unicast summary

=============================

BGP router identifier 10.10.10.1, local AS number 4259632651 vrf-id 0

BGP table version 2

RIB entries 3, using 576 bytes of memory

Peers 3, using 64 KiB of memory

Neighbor V AS MsgRcvd MsgSent TblVer InQ OutQ Up/Down State/PfxRcd PfxSnt

leaf02(peerlink.4094) 4 4259632649 367 366 0 0 0 00:18:05 2 2

spine01(swp51) 4 4200000000 366 364 0 0 0 00:17:59 1 2

spine02(swp52) 4 4200000000 368 366 0 0 0 00:18:04 1 2

Total number of neighbors 3

cumulus@leaf02:mgmt:~$ net show bgp summary

show bgp ipv4 unicast summary

=============================

BGP router identifier 10.10.10.2, local AS number 4259632649 vrf-id 0

BGP table version 3

RIB entries 3, using 576 bytes of memory

Peers 3, using 64 KiB of memory

Neighbor V AS MsgRcvd MsgSent TblVer InQ OutQ Up/Down State/PfxRcd PfxSnt

leaf01(peerlink.4094) 4 4259632651 410 412 0 0 0 00:20:16 2 2

spine01(swp51) 4 4200000000 413 412 0 0 0 00:20:19 2 2

spine02(swp52) 4 4200000000 411 410 0 0 0 00:20:13 2 2

Total number of neighbors 3

cumulus@leaf03:mgmt:~$ net show bgp summary

show bgp ipv4 unicast summary

=============================

BGP router identifier 10.10.10.3, local AS number 4259632661 vrf-id 0

BGP table version 2

RIB entries 3, using 576 bytes of memory

Peers 3, using 64 KiB of memory

Neighbor V AS MsgRcvd MsgSent TblVer InQ OutQ Up/Down State/PfxRcd PfxSnt

leaf04(peerlink.4094) 4 4259632667 418 417 0 0 0 00:20:38 2 2

spine01(swp51) 4 4200000000 419 417 0 0 0 00:20:37 1 2

spine02(swp52) 4 4200000000 417 415 0 0 0 00:20:32 1 2

Total number of neighbors 3

cumulus@leaf04:mgmt:~$ net show bgp summary

show bgp ipv4 unicast summary

=============================

BGP router identifier 10.10.10.4, local AS number 4259632667 vrf-id 0

BGP table version 3

RIB entries 3, using 576 bytes of memory

Peers 3, using 64 KiB of memory

Neighbor V AS MsgRcvd MsgSent TblVer InQ OutQ Up/Down State/PfxRcd PfxSnt

leaf03(peerlink.4094) 4 4259632661 448 449 0 0 0 00:22:10 2 2

spine01(swp51) 4 4200000000 449 448 0 0 0 00:22:09 2 2

spine02(swp52) 4 4200000000 447 446 0 0 0 00:22:03 2 2

Total number of neighbors 3

cumulus@spine01:mgmt:~$ net show bgp summary

show bgp ipv4 unicast summary

=============================

BGP router identifier 10.10.10.101, local AS number 4200000000 vrf-id 0

BGP table version 27

RIB entries 3, using 576 bytes of memory

Peers 4, using 85 KiB of memory

Neighbor V AS MsgRcvd MsgSent TblVer InQ OutQ Up/Down State/PfxRcd PfxSnt

leaf01(swp1) 4 4259632651 46052 46073 0 0 0 00:22:34 1 2

leaf02(swp2) 4 4259632649 46061 46091 0 0 0 00:22:43 1 2

leaf03(swp3) 4 4259632661 46125 46147 0 0 0 00:22:33 1 2

leaf04(swp4) 4 4259632667 46133 46168 0 0 0 00:22:33 1 2

Total number of neighbors 4

cumulus@spine02:mgmt:~$ net show bgp summary

show bgp ipv4 unicast summary

=============================

BGP router identifier 10.10.10.102, local AS number 4200000000 vrf-id 0

BGP table version 21

RIB entries 3, using 576 bytes of memory

Peers 4, using 85 KiB of memory

Neighbor V AS MsgRcvd MsgSent TblVer InQ OutQ Up/Down State/PfxRcd PfxSnt

leaf01(swp1) 4 4259632651 46047 46061 0 0 0 00:23:04 1 2

leaf02(swp2) 4 4259632649 46049 46073 0 0 0 00:23:03 1 2

leaf03(swp3) 4 4259632661 46112 46158 0 0 0 00:22:53 1 2

leaf04(swp4) 4 4259632667 46111 46149 0 0 0 00:22:53 1 2

Total number of neighbors 4
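When checking many switches, the summary can be scanned programmatically. The following is a minimal sketch, assuming the column layout shown in the outputs above: an established peer shows a prefix count in the State/PfxRcd column, while a peer that is not established shows a state name such as Active or Idle. The function names and the failing swp52 row in SAMPLE are hypothetical and only illustrate the check.

```python
def bgp_peer_states(summary: str) -> dict[str, str]:
    """Map each neighbor to the value of its State/PfxRcd column."""
    peers = {}
    for line in summary.splitlines():
        fields = line.split()
        # Neighbor rows have at least 11 columns and BGP version 4 in the second one.
        if len(fields) >= 11 and fields[1] == "4":
            peers[fields[0]] = fields[9]
    return peers


def down_peers(summary: str) -> list[str]:
    """Return neighbors whose State/PfxRcd column is not a prefix count."""
    return [name for name, state in bgp_peer_states(summary).items() if not state.isdigit()]


# Two rows copied from the leaf01 output; the last row is a made-up failure case.
SAMPLE = """\
Neighbor V AS MsgRcvd MsgSent TblVer InQ OutQ Up/Down State/PfxRcd PfxSnt
leaf02(peerlink.4094) 4 4259632649 367 366 0 0 0 00:18:05 2 2
spine01(swp51) 4 4200000000 366 364 0 0 0 00:17:59 1 2
swp52 4 0 0 0 0 0 0 never Active 0
"""

if __name__ == "__main__":
    print(down_peers(SAMPLE))
```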

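After all BGP peerings are established, every redistributed local TEP subnet appears in the routing table of each switch. Use the net show route command in NVUE or the show ip route command in vtysh to check the routing table.

Leaf01 has two ECMP routes (through both spine switches) to the ESXi03 TEP subnet, 10.2.2.0/24, received through BGP: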

cumulus@leaf01:mgmt:~$ net show route

show ip route

=============

Codes: K - kernel route, C - connected, S - static, R - RIP,

O - OSPF, I - IS-IS, B - BGP, E - EIGRP, N - NHRP,

T - Table, v - VNC, V - VNC-Direct, A - Babel, D - SHARP,

F - PBR, f - OpenFabric,

> - selected route, * - FIB route, q - queued, r - rejected, b - backup

t - trapped, o - offload failure

C * 10.1.1.0/24 [0/1024] is directly connected, vlan100-v0, 00:28:07

C>* 10.1.1.0/24 is directly connected, vlan100, 00:28:07

B>* 10.2.2.0/24 [20/0] via fe80::4638:39ff:fe00:1, swp51, weight 1, 00:10:13

* via fe80::4638:39ff:fe00:3, swp52, weight 1, 00:10:13

Leaf02 has two ECMP routes (through both spine switches) to the ESXi03 TEP subnet, 10.2.2.0/24, received through BGP:

cumulus@leaf02:mgmt:~$ net show route

show ip route

=============

Codes: K - kernel route, C - connected, S - static, R - RIP,

O - OSPF, I - IS-IS, B - BGP, E - EIGRP, N - NHRP,

T - Table, v - VNC, V - VNC-Direct, A - Babel, D - SHARP,

F - PBR, f - OpenFabric,

> - selected route, * - FIB route, q - queued, r - rejected, b - backup

t - trapped, o - offload failure

C * 10.1.1.0/24 [0/1024] is directly connected, vlan100-v0, 00:28:17

C>* 10.1.1.0/24 is directly connected, vlan100, 00:28:17

B>* 10.2.2.0/24 [20/0] via fe80::4638:39ff:fe00:9, swp51, weight 1, 00:10:19

* via fe80::4638:39ff:fe00:b, swp52, weight 1, 00:10:19

Leaf03 has two ECMP routes (through both spine switches) to the ESXi01 TEP subnet, 10.1.1.0/24, received through BGP:

cumulus@leaf03:mgmt:~$ net show route

show ip route

=============

Codes: K - kernel route, C - connected, S - static, R - RIP,

O - OSPF, I - IS-IS, B - BGP, E - EIGRP, N - NHRP,

T - Table, v - VNC, V - VNC-Direct, A - Babel, D - SHARP,

F - PBR, f - OpenFabric,

> - selected route, * - FIB route, q - queued, r - rejected, b - backup

t - trapped, o - offload failure

B>* 10.1.1.0/24 [20/0] via fe80::4638:39ff:fe00:11, swp51, weight 1, 00:10:27

* via fe80::4638:39ff:fe00:13, swp52, weight 1, 00:10:27

C * 10.2.2.0/24 [0/1024] is directly connected, vlan100-v0, 00:28:11

C>* 10.2.2.0/24 is directly connected, vlan100, 00:28:11

Leaf04 has two ECMP routes (through both spine switches) to the ESXi01 TEP subnet, 10.1.1.0/24, received through BGP:

cumulus@leaf04:mgmt:~$ net show route

show ip route

=============

Codes: K - kernel route, C - connected, S - static, R - RIP,

O - OSPF, I - IS-IS, B - BGP, E - EIGRP, N - NHRP,

T - Table, v - VNC, V - VNC-Direct, A - Babel, D - SHARP,

F - PBR, f - OpenFabric,

> - selected route, * - FIB route, q - queued, r - rejected, b - backup

t - trapped, o - offload failure

B>* 10.1.1.0/24 [20/0] via fe80::4638:39ff:fe00:19, swp51, weight 1, 00:10:30

* via fe80::4638:39ff:fe00:1b, swp52, weight 1, 00:10:30

C * 10.2.2.0/24 [0/1024] is directly connected, vlan100-v0, 00:28:11

C>* 10.2.2.0/24 is directly connected, vlan100, 00:28:11

Spine01 has two ECMP routes to the TEP subnet of each ESXi hypervisor, 10.1.1.0/24 and 10.2.2.0/24, received through BGP:

cumulus@spine01:mgmt:~$ net show route

show ip route

=============

Codes: K - kernel route, C - connected, S - static, R - RIP,

O - OSPF, I - IS-IS, B - BGP, E - EIGRP, N - NHRP,

T - Table, v - VNC, V - VNC-Direct, A - Babel, D - SHARP,

F - PBR, f - OpenFabric,

> - selected route, * - FIB route, q - queued, r - rejected, b - backup

t - trapped, o - offload failure

B>* 10.1.1.0/24 [20/0] via fe80::4638:39ff:fe00:2, swp1, weight 1, 00:07:44

* via fe80::4638:39ff:fe00:a, swp2, weight 1, 00:07:44

B>* 10.2.2.0/24 [20/0] via fe80::4638:39ff:fe00:12, swp3, weight 1, 00:07:39

* via fe80::4638:39ff:fe00:1a, swp4, weight 1, 00:07:39

Spine02 has two ECMP routes to the TEP subnet of each ESXi hypervisor, 10.1.1.0/24 and 10.2.2.0/24, received through BGP:

cumulus@spine02:mgmt:~$ net show route

show ip route

=============

Codes: K - kernel route, C - connected, S - static, R - RIP,

O - OSPF, I - IS-IS, B - BGP, E - EIGRP, N - NHRP,

T - Table, v - VNC, V - VNC-Direct, A - Babel, D - SHARP,

F - PBR, f - OpenFabric,

> - selected route, * - FIB route, q - queued, r - rejected, b - backup

t - trapped, o - offload failure

B>* 10.1.1.0/24 [20/0] via fe80::4638:39ff:fe00:4, swp1, weight 1, 00:09:28

* via fe80::4638:39ff:fe00:c, swp2, weight 1, 00:09:28

B>* 10.2.2.0/24 [20/0] via fe80::4638:39ff:fe00:14, swp3, weight 1, 00:09:24

* via fe80::4638:39ff:fe00:1c, swp4, weight 1, 00:09:24
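The ECMP check in the outputs above can be sketched as a small parser that collects the outgoing interfaces for each BGP-learned prefix. It assumes the show ip route text format shown in this section, where a line starting with B carries the first next hop and a following "* via" line carries each additional one. The function name bgp_next_hops is illustrative.

```python
def bgp_next_hops(route_output: str) -> dict[str, list[str]]:
    """Map each BGP-learned prefix to the interfaces of its next hops."""
    routes: dict[str, list[str]] = {}
    current = None
    for line in route_output.splitlines():
        fields = line.replace(",", " ").split()
        if not fields:
            continue
        if fields[0].startswith("B") and "via" in fields:
            # Example: B>* 10.2.2.0/24 [20/0] via fe80::..., swp51, weight 1, ...
            current = fields[1]
            routes[current] = [fields[fields.index("via") + 2]]
        elif current and fields[:2] == ["*", "via"] and len(fields) > 3:
            # Continuation line: one more ECMP next hop for the same prefix.
            routes[current].append(fields[3])
        else:
            current = None
    return routes


# Route lines copied from the leaf01 output in this section.
SAMPLE = """\
C * 10.1.1.0/24 [0/1024] is directly connected, vlan100-v0, 00:28:07
C>* 10.1.1.0/24 is directly connected, vlan100, 00:28:07
B>* 10.2.2.0/24 [20/0] via fe80::4638:39ff:fe00:1, swp51, weight 1, 00:10:13
* via fe80::4638:39ff:fe00:3, swp52, weight 1, 00:10:13
"""

if __name__ == "__main__":
    for prefix, interfaces in bgp_next_hops(SAMPLE).items():
        print(prefix, len(interfaces), interfaces)
```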

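Traffic Flow

The ESXi hypervisors can reach every TEP address and build Geneve tunnels for overlay VM-to-VM traffic.

This section describes two traffic flow examples: layer 2 virtualized traffic and layer 3 virtualized traffic.

Layer 2 Virtualized Traffic

ESXi assigns both VMs to the same VMware logical segment, which places them in the same subnet. Each segment has its own unique virtual network identifier (VNI), assigned by NSX-T. The source TEP adds this VNI to the Geneve packet header, and the destination TEP uses it to identify the segment that the traffic belongs to. All segments that share an overlay transport zone (TZ) use the same TEP addresses to establish tunnels. An N-VDS can have more than one overlay TZ, but in that case you must configure additional TEP VLANs and advertise them on the underlay switches. This scenario uses only one overlay TZ (one TEP VLAN).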

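VM1 (172.16.0.1) on ESXi01 sends traffic to VM3 (172.16.0.3) on ESXi03:

- The packet reaches the TEP device of the local hypervisor, 10.1.1.1.

- The local TEP device encapsulates it into a new Geneve packet and inserts the VNI assigned to the segment, 65510. The source IP address of the new encapsulated packet is the local TEP IP 10.1.1.1, and the destination IP address is the remote TEP device 10.2.2.1.

- The encapsulated packet is routed through the underlay network to the remote TEP device.

- The remote TEP device (ESXi03) receives and decapsulates the Geneve packet.

- Based on the VNI in the Geneve header, the traffic is forwarded to the destination VM3.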

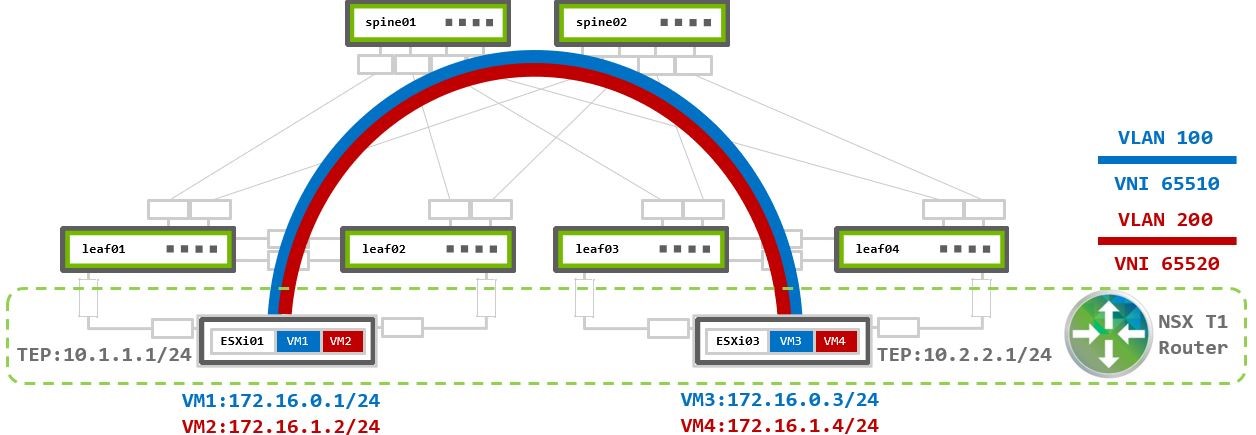

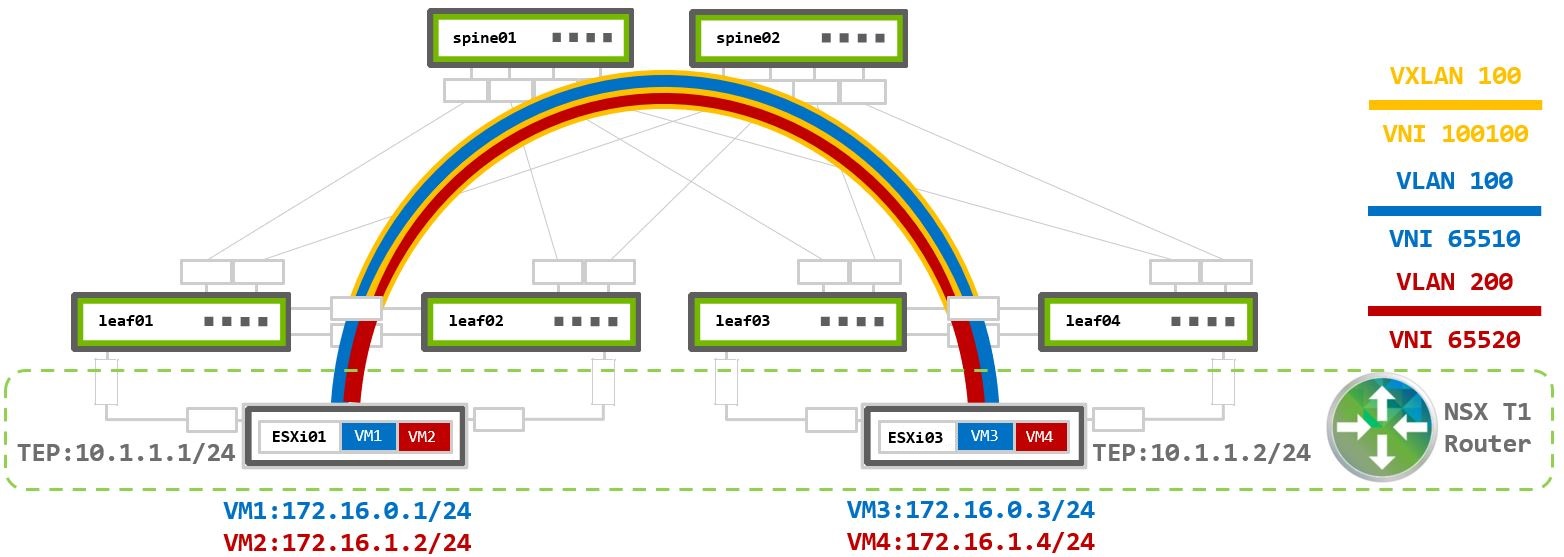
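To make the VNI step in this flow concrete, here is a minimal sketch of the 8-byte Geneve base header as defined in RFC 8926, built for VNI 65510 and then read back as the destination TEP would. It is an illustration only, not how NSX-T implements encapsulation: it assumes no Geneve options, and the function names are hypothetical.

```python
import struct

GENEVE_UDP_PORT = 6081        # IANA-assigned UDP destination port for Geneve
ETHERNET_PROTOCOL = 0x6558    # protocol type for an encapsulated Ethernet frame


def geneve_base_header(vni: int) -> bytes:
    """Pack the 8-byte Geneve base header with no options."""
    if not 0 <= vni < 2**24:
        raise ValueError("VNI must fit in 24 bits")
    version_and_opt_len = 0   # version 0, option length 0
    flags = 0                 # O and C bits cleared
    # The 24-bit VNI fills the upper three bytes of the last word; the low byte is reserved.
    return struct.pack("!BBHI", version_and_opt_len, flags, ETHERNET_PROTOCOL, vni << 8)


def vni_from_header(header: bytes) -> int:
    """Read the VNI back out of a Geneve base header."""
    return struct.unpack("!BBHI", header[:8])[3] >> 8


if __name__ == "__main__":
    header = geneve_base_header(65510)
    print(header.hex(), vni_from_header(header))
```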

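Layer 3 Virtualized Traffic

This scenario examines two segments (logical switches), each with two VMs assigned. Each segment is assigned a unique VNI. For communication between segments, which VMware calls "east-west traffic", the traffic must be routed by a Tier-1 gateway (T1 router). The T1 router is a logical distributed router that exists in every ESXi hypervisor and connects to each logical segment. It is the default gateway for the segments and routes traffic between them.

Routing in a VMware environment is always done as close to the source as possible.

VM1 and VM3 are in VLAN100, 172.16.0.0/24, VNI 65510.

VM2 and VM4 are in VLAN200, 172.16.1.0/24, VNI 65520.

Both segments are assigned to the same overlay TZ, which uses the same TEP VLAN (TEP IPs 10.1.1.1 and 10.2.2.1) to establish the overlay Geneve tunnel between the physical ESXi01 and ESXi03 hypervisors. No additional configuration is needed on the switches.

Traffic within the same segment is handled in the same way as in the Layer 2 Virtualized Traffic scenario.

Multiple T1 routers can be used to load balance across segments. These routers are then connected using a Tier-0 gateway (T0 router). The T1-to-T0 router model is described in the Traffic Flow section of the virtualized and bare metal server environment.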

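VM1 (172.16.0.1) on ESXi01 sends traffic to VM4 (172.16.1.4) on ESXi03:

- Inside ESXi01, the routed packet reaches the local T1 router.

- The T1 router checks its routing table to determine the destination path.

- Because the VLAN200 segment is also attached to the same T1 router, the packet is routed into the destination VLAN200 segment.

- The local TEP then encapsulates the packet into Geneve using the VNI of the VLAN200 segment, 65520. The source and destination IP addresses of the Geneve packet are the TEP devices (10.1.1.1 and 10.2.2.1).

- The encapsulated packet is sent to the remote TEP device over the Geneve overlay tunnel, routed by BGP in the underlay IP fabric.

- The remote TEP device (ESXi03) receives and decapsulates the Geneve packet.

- Based on the VNI in the Geneve header, the traffic is forwarded to the destination VM4.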

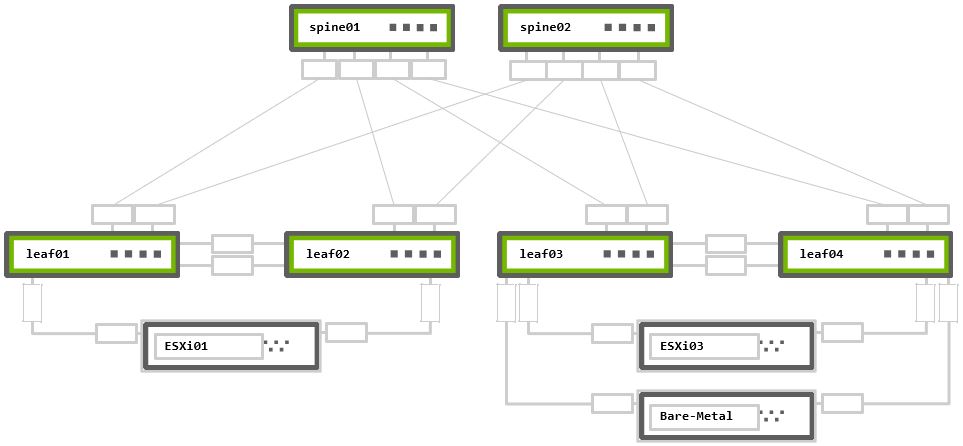

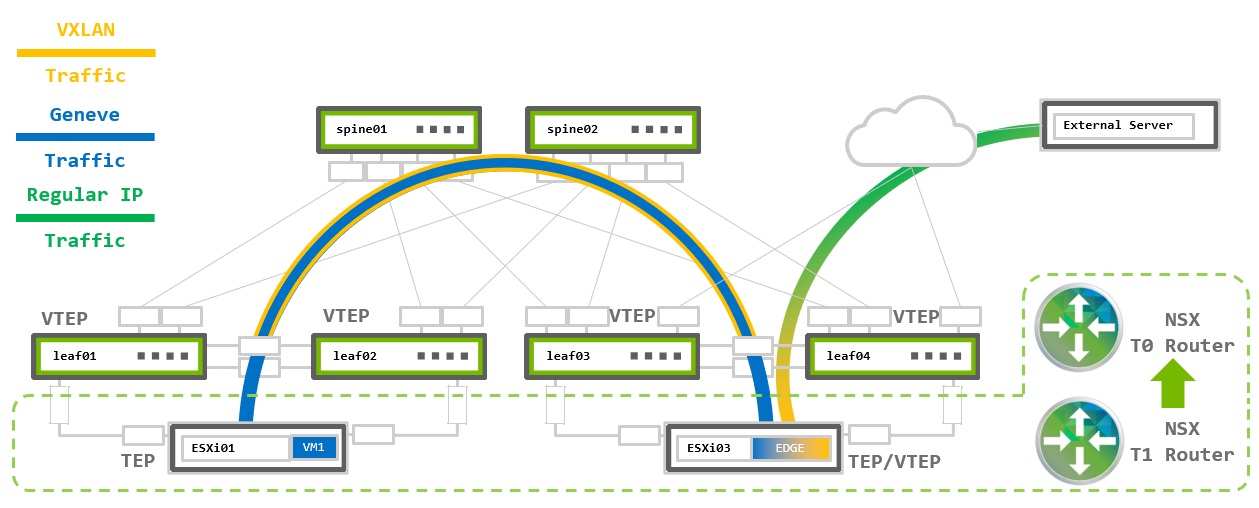
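The routing decision in this flow can be sketched as a lookup from destination address to segment and VNI. The subnets and VNIs are the ones listed for this scenario; the SEGMENTS table and the destination_segment function are illustrative names, and the lookup is a simplified stand-in for the T1 routing table.

```python
import ipaddress

# Segment data from this scenario: name -> (subnet, VNI).
SEGMENTS = {
    "VLAN100": (ipaddress.ip_network("172.16.0.0/24"), 65510),
    "VLAN200": (ipaddress.ip_network("172.16.1.0/24"), 65520),
}


def destination_segment(dst_ip: str) -> tuple[str, int]:
    """Return the (segment, VNI) that a T1-style lookup selects for dst_ip."""
    address = ipaddress.ip_address(dst_ip)
    for name, (subnet, vni) in SEGMENTS.items():
        if address in subnet:
            return name, vni
    raise LookupError(f"no connected segment for {dst_ip}")


if __name__ == "__main__":
    # VM1 to VM4: routed into VLAN200, so the Geneve header carries VNI 65520.
    print(destination_segment("172.16.1.4"))
```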

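Virtualized and Bare Metal Server Environment

This use case covers a VMware virtualized environment that needs connectivity to bare metal (BM) servers. This can be the case when the virtualized environment is deployed as part of an existing fabric (brownfield) and VMs need to communicate with legacy servers or any other servers that do not run VMs (that are not part of the virtualized world).

When VMs need to communicate with non-NSX (bare metal) servers, you must deploy an NSX Edge. The NSX Edge is the gateway between the virtualized Geneve overlay and the external physical underlay. It acts as both a TEP device and an underlay router, establishing BGP (or OSPF) peering with the underlay fabric to route traffic into and out of the virtualized environment.

There is also an option for VM-to-bare-metal communication using Geneve encapsulation, described in NSX Edge on Bare Metal. This is beyond the scope of this guide.

The example configurations are based on the following topology:

Rack 1 – two NVIDIA switches in MLAG + one ESXi hypervisor connected in an active-active bond

Rack 2 – two NVIDIA switches in MLAG + one ESXi hypervisor and one bare metal server, both connected in active-active bonds

The configuration to support the NSX Edge node is almost identical to the existing ESXi configuration described earlier. Only the changes needed to support the NSX Edge device are described below.

VRR Configuration

The NSX Edge uses two additional virtual uplinks (VLANs) to communicate with the physical world. One VLAN provides connectivity to the underlay physical network, and the second connects to the virtual overlay network. NSX Edge nodes do not support LACP bonding. To provide load balancing, each VLAN is configured over a single link to a single top-of-rack switch.

The following example uses subinterfaces.

You can also configure VLANs for NSX Edge node connectivity. There is no technical reason to prefer VLANs over subinterfaces or the reverse.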

cumulus@leaf03:mgmt:~$ nv set bridge domain br_default vlan 100 ### TEP VLAN

cumulus@leaf03:mgmt:~$ nv set interface vlan100 ip address 10.2.2.252/24 ### TEP SVI

cumulus@leaf03:mgmt:~$ nv set interface vlan100 ip vrr address 10.2.2.254/24 ### TEP VRR IP (GW)

cumulus@leaf03:mgmt:~$ nv set interface vlan100 ip vrr mac-address 00:00:00:01:00:00 ### TEP VRR MAC (GW)

cumulus@leaf03:mgmt:~$ nv set bridge domain br_default vlan 200 ### BM VLAN

cumulus@leaf03:mgmt:~$ nv set interface vlan200 ip address 192.168.0.252/24 ### BM SVI

cumulus@leaf03:mgmt:~$ nv set interface vlan200 ip vrr address 192.168.0.254/24 ### BM VRR IP (GW)

cumulus@leaf03:mgmt:~$ nv set interface vlan200 ip vrr mac-address 00:00:00:19:21:68 ### BM VRR MAC (GW)

cumulus@leaf03:mgmt:~$ nv set interface swp1.30 type sub ### Edge VLAN30 subinterface

cumulus@leaf03:mgmt:~$ nv set interface swp1.30 ip address 10.30.0.254/24 ### Edge VLAN30 subinterface SVI

cumulus@leaf03:mgmt:~$ nv config apply -y

cumulus@leaf03:mgmt:~$ nv config save

cumulus@leaf04:mgmt:~$ nv set bridge domain br_default vlan 100 ### TEP VLAN

cumulus@leaf04:mgmt:~$ nv set interface vlan100 ip address 10.2.2.253/24 ### TEP SVI

cumulus@leaf04:mgmt:~$ nv set interface vlan100 ip vrr address 10.2.2.254/24 ### TEP VRR IP (GW)

cumulus@leaf04:mgmt:~$ nv set interface vlan100 ip vrr mac-address 00:00:00:01:00:00 ### TEP VRR MAC (GW)

cumulus@leaf04:mgmt:~$ nv set bridge domain br_default vlan 200 ### BM VLAN

cumulus@leaf04:mgmt:~$ nv set interface vlan200 ip address 192.168.0.253/24 ### BM SVI

cumulus@leaf04:mgmt:~$ nv set interface vlan200 ip vrr address 192.168.0.254/24 ### BM VRR IP (GW)

cumulus@leaf04:mgmt:~$ nv set interface vlan200 ip vrr mac-address 00:00:00:19:21:68 ### BM VRR MAC (GW)

cumulus@leaf04:mgmt:~$ nv set interface swp1.31 type sub ### Edge VLAN31 subinterface

cumulus@leaf04:mgmt:~$ nv set interface swp1.31 ip address 10.31.0.254/24 ### Edge VLAN31 subinterface SVI

cumulus@leaf04:mgmt:~$ nv config apply -y

cumulus@leaf04:mgmt:~$ nv config save

VRR and Subinterface Configuration Verification

The nv show interface output shows the SVI and VRR interfaces as vlanXXX and vlanXXX-v0. It shows the subinterfaces as swpX.xx.

cumulus@leaf03:mgmt:~$ nv show interface

Interface MTU Speed State Remote Host Remote Port Type Summary

---------------- ----- ----- ----- --------------- ----------------- -------- -------------------------------

+ esxi03 9216 1G up bond

+ eth0 1500 1G up oob-mgmt-switch swp12 eth IP Address: 192.168.200.13/24

+ lo 65536 up loopback IP Address: 127.0.0.1/8

lo IP Address: ::1/128

+ peerlink 9216 2G up bond

+ peerlink.4094 9216 up sub

+ swp1 9216 1G up esxi03 44:38:39:00:00:3e swp

+ swp1.30 9216 up sub IP Address: 10.30.0.254/24

+ swp49 9216 1G up leaf04 swp49 swp

+ swp50 9216 1G up leaf04 swp50 swp

+ swp51 9216 1G up spine01 swp3 swp

+ swp52 9216 1G up spine02 swp3 swp

+ vlan100 9216 up svi IP Address: 10.2.2.252/24

+ vlan100-v0 9216 up svi IP Address: 10.2.2.254/24

+ vlan200 9216 up svi IP Address: 192.168.0.252/24

+ vlan200-v0 9216 up svi IP Address: 192.168.0.254/24

cumulus@leaf04:mgmt:~$ nv show interface

Interface MTU Speed State Remote Host Remote Port Type Summary

---------------- ----- ----- ----- --------------- ----------------- -------- -------------------------------

+ esxi03 9216 1G up bond

+ eth0 1500 1G up oob-mgmt-switch swp13 eth IP Address: 192.168.200.14/24

+ lo 65536 up loopback IP Address: 127.0.0.1/8

lo IP Address: ::1/128

+ peerlink 9216 2G up bond

+ peerlink.4094 9216 up sub

+ swp1 9216 1G up esxi03 44:38:39:00:00:44 swp

+ swp1.31 9216 up sub IP Address: 10.31.0.254/24

+ swp49 9216 1G up leaf03 swp49 swp

+ swp50 9216 1G up leaf03 swp50 swp

+ swp51 9216 1G up spine01 swp4 swp

+ swp52 9216 1G up spine02 swp4 swp

+ vlan100 9216 up svi IP Address: 10.2.2.253/24

+ vlan100-v0 9216 up svi IP Address: 10.2.2.254/24

+ vlan200 9216 up svi IP Address: 192.168.0.253/24

+ vlan200-v0 9216 up svi IP Address: 192.168.0.254/24

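BGP Underlay Network Configuration

All underlay IP fabric BGP peerings in this guide use eBGP as the basis of the configuration. The example configurations use Cumulus Linux Auto BGP and BGP unnumbered.

You must configure the subinterfaces on leaf03 and leaf04 with unique IPv4 addresses. NSX Edge nodes do not support BGP unnumbered.

Auto BGP configuration is only available when using NVUE. If you want to use vtysh configuration, you must configure a BGP ASN.

For more details, see the Configuring FRRouting documentation.

Compared with the earlier configuration, the only change is the addition of numbered BGP peerings from the leaf nodes to the Edge node server.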

cumulus@leaf03:mgmt:~$ nv set vrf default router bgp autonomous-system leaf

cumulus@leaf03:mgmt:~$ nv set vrf default router bgp neighbor peerlink.4094 remote-as external

cumulus@leaf03:mgmt:~$ nv set vrf default router bgp neighbor swp51 remote-as external

cumulus@leaf03:mgmt:~$ nv set vrf default router bgp neighbor swp52 remote-as external

cumulus@leaf03:mgmt:~$ nv set vrf default router bgp neighbor 10.30.0.1 remote-as 65555 ### BGP to Edge VM in ASN 65555

cumulus@leaf03:mgmt:~$ nv set vrf default router bgp router-id 10.10.10.3

cumulus@leaf03:mgmt:~$ nv config apply -y

cumulus@leaf03:mgmt:~$ nv config save

cumulus@leaf04:mgmt:~$ nv set vrf default router bgp autonomous-system leaf

cumulus@leaf04:mgmt:~$ nv set vrf default router bgp neighbor peerlink.4094 remote-as external

cumulus@leaf04:mgmt:~$ nv set vrf default router bgp neighbor swp51 remote-as external

cumulus@leaf04:mgmt:~$ nv set vrf default router bgp neighbor swp52 remote-as external

cumulus@leaf04:mgmt:~$ nv set vrf default router bgp neighbor 10.31.0.1 remote-as 65555 ### BGP to Edge VM in ASN 65555

cumulus@leaf04:mgmt:~$ nv set vrf default router bgp router-id 10.10.10.4

cumulus@leaf04:mgmt:~$ nv config apply -y

cumulus@leaf04:mgmt:~$ nv config save

cumulus@leaf03:~$ sudo vtysh

leaf03# configure terminal

leaf03(config)# router bgp 65103

leaf03(config-router)# bgp router-id 10.10.10.3

leaf03(config-router)# neighbor peerlink.4094 interface remote-as external

leaf03(config-router)# neighbor swp51 interface remote-as external

leaf03(config-router)# neighbor swp52 interface remote-as external

leaf03(config-router)# neighbor 10.30.0.1 remote-as 65555

leaf03(config-router)# end

leaf03# write memory

leaf03# exit

cumulus@leaf04:~$ sudo vtysh

leaf04# configure terminal

leaf04(config)# router bgp 65104

leaf04(config-router)# bgp router-id 10.10.10.4

leaf04(config-router)# neighbor peerlink.4094 interface remote-as external

leaf04(config-router)# neighbor swp51 interface remote-as external

leaf04(config-router)# neighbor swp52 interface remote-as external

leaf04(config-router)# neighbor 10.31.0.1 remote-as 65555

leaf04(config-router)# end

leaf04# write memory

leaf04# exit

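BGP Peering and Route Advertisement Verification

To verify that all BGP peerings are established correctly, use the net show bgp summary command in NVUE or the show ip bgp summary command in vtysh.

The numbered BGP peering to the Edge node should appear in the BGP neighbor list.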

cumulus@leaf03:mgmt:~$ net show bgp summary

show bgp ipv4 unicast summary

=============================

BGP router identifier 10.10.10.3, local AS number 4259632661 vrf-id 0

BGP table version 2

RIB entries 3, using 576 bytes of memory

Peers 3, using 64 KiB of memory

Neighbor V AS MsgRcvd MsgSent TblVer InQ OutQ Up/Down State/PfxRcd PfxSnt

leaf04(peerlink.4094) 4 4259632667 50465 50468 0 0 0 00:22:41 5 6

spine01(swp51) 4 4200000000 50480 50503 0 0 0 00:22:54 3 6

spine02(swp52) 4 4200000000 50466 50491 0 0 0 00:22:35 4 6

10.30.0.1 4 65555 1023 1035 0 0 0 00:11:23 2 6

Total number of neighbors 3

cumulus@leaf04:mgmt:~$ net show bgp summary

show bgp ipv4 unicast summary

=============================

BGP router identifier 10.10.10.4, local AS number 4259632667 vrf-id 0

BGP table version 3

RIB entries 3, using 576 bytes of memory

Peers 3, using 64 KiB of memory

Neighbor V AS MsgRcvd MsgSent TblVer InQ OutQ Up/Down State/PfxRcd PfxSnt

leaf03(peerlink.4094) 4 4259632661 25231 25236 0 0 0 00:25:44 5 6

spine01(swp51) 4 4200000000 25232 25236 0 0 0 00:25:45 5 6

spine02(swp52) 4 4200000000 25233 25228 0 0 0 00:25:41 4 6

10.31.0.1 4 65555 1056 1076 0 0 0 00:11:35 2 6

Total number of neighbors 3

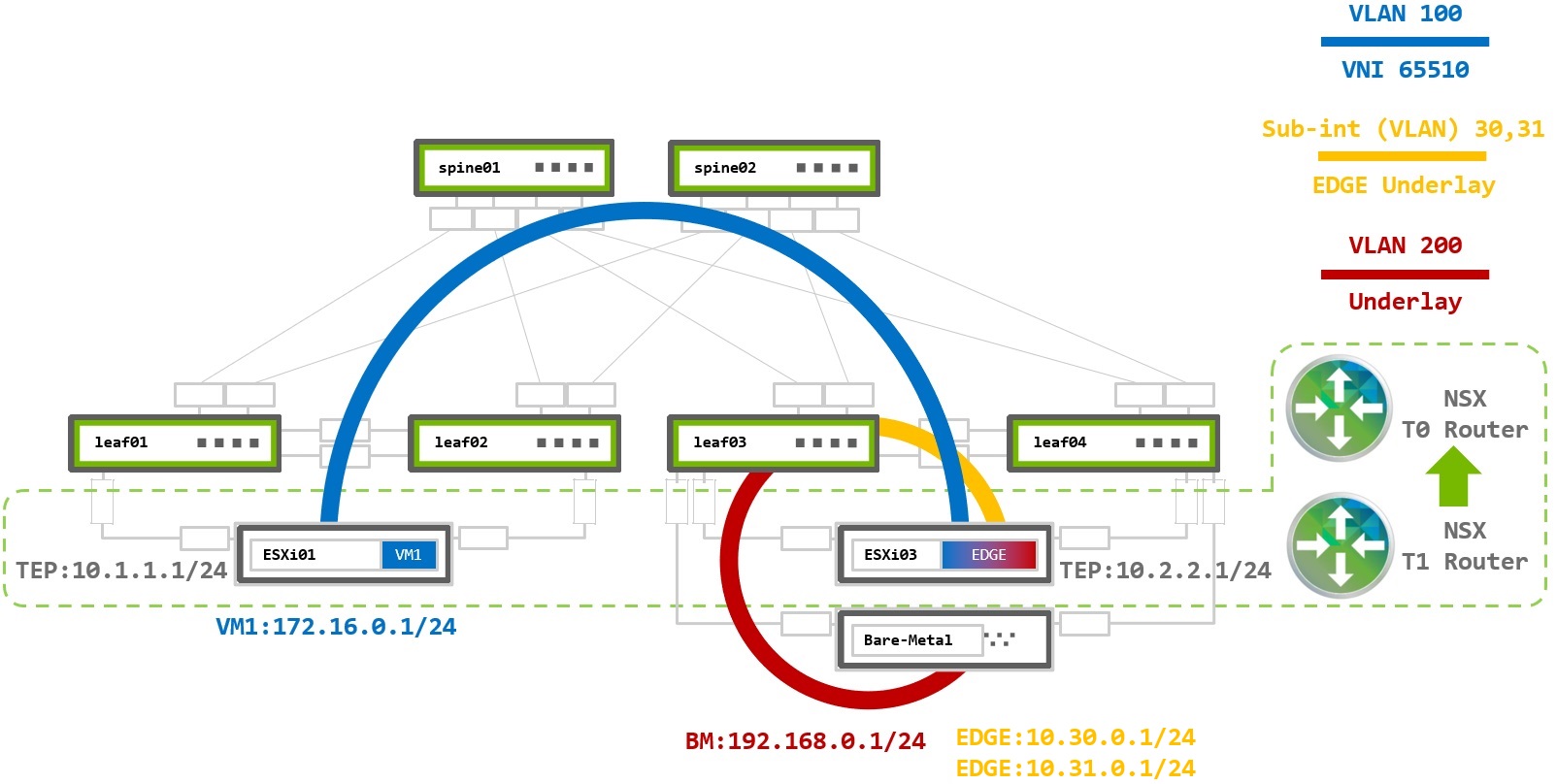

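Traffic Flow

This scenario examines a VM assigned to logical segment VLAN100 (in the virtualized network), a bare metal server in VLAN200 (in the underlay network), and the NSX Edge VM located on the ESXi03 host. The Edge VM is the gateway between the virtual and physical networks ("north-south" traffic in VMware terminology). It uses two logical uplinks in VLANs 30 and 31, which have BGP peerings with the underlay leaf switches to route VM-to-bare-metal traffic.

The diagram also includes Tier-0 and Tier-1 routers. The T0 router has BGP peerings with the leaf switches and advertises the overlay network routes. The T1 router is the gateway for the virtual hosts.

The T0 router has a logical downlink to the T1 router and two logical uplinks to the physical world through VLAN30 and VLAN31.

The T1 router has logical downlinks to the virtual segments and a logical uplink to the T0 router.

NSX automatically creates the links between the T1 and T0 routers, and the T0 router must advertise them into the underlay BGP network.

The NSX Edge VM has a virtual NIC (vNIC) in each of the overlay and VLAN transport zones. It receives Geneve-encapsulated traffic through the TEP device in the overlay TZ (east-west traffic) and forwards it through the T0 router to the VLAN TZ (north-south traffic).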

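As an example traffic flow, VM1 (172.16.0.1) on ESXi01 sends traffic to the bare metal server 192.168.0.1:

- The packet from VM1 reaches the local T1 router, which is the default gateway of VM1.

- The T1 router checks its routing table to determine the destination path.

- The bare metal subnet 192.168.0.0/24 was received from the T0 router, which becomes the next hop through the Geneve tunnel.

- The local TEP on ESXi01 encapsulates the packet from the VLAN100 segment into Geneve using VNI 65010. The destination is the T0 router on the Edge, on ESXi03.

- The packet is sent to ESXi03 with the TEP devices 10.1.1.1 and 10.2.2.1 as the source and destination IP addresses.

- The Edge TEP decapsulates the packet and forwards it to the T0 router, which is also located inside host ESXi03.

- The T0 router receives the IP packet with destination address 192.168.0.1 and uses the BGP peering with Leaf03 as the next hop.

- The packet is sent to Leaf03 in VLAN30, where it is routed into VLAN200 and delivered to the bare metal server.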

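Virtualized Environment over an EVPN Fabric

EVPN Underlay Fabric

The previous examples discussed deploying NSX on a pure IP fabric. Modern data centers often use EVPN to support multi-tenancy and layer 2 extension without VMware NSX. When NSX is deployed on an EVPN fabric, it works the same way as when it is deployed on a pure IP fabric. NSX operates independently of EVPN.

With an EVPN underlay fabric, the Geneve packets generated by NSX are encapsulated into VXLAN packets on the leaf switches and transported across the network. With an EVPN deployment, the simplest option is to configure all TEP addresses in the same subnet and use VXLAN layer 2 extension to provide TEP-to-TEP connectivity.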
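Because the Geneve packet is wrapped again in VXLAN, the encapsulation overhead adds up on the leaf-spine links. The following is a rough arithmetic sketch, assuming IPv4 outer headers, untagged Ethernet frames inside each tunnel, and no Geneve options unless you pass geneve_options; actual NSX-T traffic can carry options, so treat the result as a lower bound. The function name underlay_ip_mtu is illustrative.

```python
ETHERNET_HEADER = 14     # untagged Ethernet II header
IPV4_HEADER = 20         # IPv4 header without options
UDP_HEADER = 8
GENEVE_BASE_HEADER = 8   # Geneve options, if present, add to this
VXLAN_HEADER = 8


def underlay_ip_mtu(vm_ip_mtu: int = 1500, geneve_options: int = 0) -> int:
    """IP packet size on the leaf-spine links for a full-size VM packet."""
    inner_frame = vm_ip_mtu + ETHERNET_HEADER
    geneve_packet = (inner_frame + GENEVE_BASE_HEADER + geneve_options
                     + UDP_HEADER + IPV4_HEADER)
    # The leaf bridges the Geneve packet as an Ethernet frame and wraps that frame in VXLAN.
    geneve_frame = geneve_packet + ETHERNET_HEADER
    return geneve_frame + VXLAN_HEADER + UDP_HEADER + IPV4_HEADER


if __name__ == "__main__":
    print(underlay_ip_mtu())        # VM MTU 1500
    print(underlay_ip_mtu(9000))    # VM MTU 9000
```

Under these assumptions, a VM MTU of 1500 needs 1600 bytes of IP MTU in the fabric, and a VM MTU of 9000 needs 9100 bytes, both within the 9216-byte interface MTU shown in the switch outputs in this guide.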

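You can use unique subnets between the TEP devices in an EVPN network; however, you must then configure VXLAN routing in the underlay network. This deployment model is beyond the scope of this guide. For more information, refer to the EVPN Inter-subnet Routing documentation.

The following switch configurations are based on layer 2 bridging with VXLAN active-active mode over a BGP-EVPN underlay.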

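TEP VLAN Configuration

When all TEP IP addresses are in the same subnet, a TEP VLAN default gateway is not required.

For VXLAN-encapsulated traffic to reach the corresponding VLAN on the other side, the TEP VLAN must map to the same VNI on all ToR switches that connect to ESXi hosts to which virtualized traffic might be sent.

VXLAN tunnel endpoints (VTEPs) use the local loopback IP address as the tunnel source and destination. You must configure a loopback interface with a unique IP address on every leaf switch and then advertise it into the underlay network through BGP. When MLAG is used, this is called VXLAN active-active, and you must configure the MLAG anycast IP with the nve vxlan mlag shared-address command. This causes the MLAG peers to share the same VTEP IP address, which is used as the inbound VXLAN tunnel destination.

The spine switches do not need any VXLAN-specific configuration, but they must enable the BGP l2vpn-evpn address family with the leaf peers to advertise EVPN routes.

EVPN MLAG Configuration

MLAG needs no modification for EVPN. For the specific MLAG configuration, refer to the earlier MLAG Configuration section.

For non-MLAG uplinks, use the interface configuration from the pure virtualized environment, Switch Port Configuration - Non-LAG N-VDS Uplink Profile.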

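VXLAN-EVPN Configuration

Each leaf requires a unique loopback IP address to advertise into the underlay network. It is used as the NVE source address to establish VXLAN tunnels. The control plane is based on BGP EVPN advertisements.

You must configure nve vxlan mlag shared-address on the NVE interface of both MLAG peers so that they are identified as one in the virtualized network. The VLAN assigned to the MLAG links (VLAN 100 in our example) must map to the same VNI on all ToR switches.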
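The three requirements above (unique loopbacks, one shared address per MLAG pair, one VNI for VLAN 100 everywhere) can be checked against an addressing plan before it is applied. This is a minimal sketch that uses the values configured in this section; the PLAN and MLAG_PAIRS structures and the plan_errors function are hypothetical names for illustration.

```python
from collections import Counter

# Values from this section: leaf -> (loopback, MLAG shared address, VNI for VLAN 100).
PLAN = {
    "leaf01": ("10.10.10.1", "10.0.1.1", 100100),
    "leaf02": ("10.10.10.2", "10.0.1.1", 100100),
    "leaf03": ("10.10.10.3", "10.0.1.2", 100100),
    "leaf04": ("10.10.10.4", "10.0.1.2", 100100),
}
MLAG_PAIRS = [("leaf01", "leaf02"), ("leaf03", "leaf04")]


def plan_errors(plan, mlag_pairs) -> list[str]:
    """Return a list of violations of the VXLAN active-active requirements."""
    errors = []
    loopbacks = Counter(loopback for loopback, _, _ in plan.values())
    errors += [f"loopback {ip} is not unique" for ip, count in loopbacks.items() if count > 1]
    if len({vni for _, _, vni in plan.values()}) > 1:
        errors.append("VLAN 100 maps to different VNIs")
    for first, second in mlag_pairs:
        if plan[first][1] != plan[second][1]:
            errors.append(f"{first} and {second} use different shared addresses")
    return errors


if __name__ == "__main__":
    print(plan_errors(PLAN, MLAG_PAIRS) or "plan is consistent")
```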

Configure the loopback IP address, the NVE interface, and the VLAN-to-VNI mapping

Configure the loopback IP address and the VXLAN shared address for MLAG:

cumulus@leaf01:mgmt:~$ nv set interface lo ip address 10.10.10.1/32

cumulus@leaf01:mgmt:~$ nv set nve vxlan mlag shared-address 10.0.1.1

Create the NVE interface with all required parameters and map VLAN 100 to a VNI to create the VXLAN tunnel:

cumulus@leaf01:mgmt:~$ nv set nve vxlan enable on

cumulus@leaf01:mgmt:~$ nv set bridge domain br_default vlan 100 vni 100100

cumulus@leaf01:mgmt:~$ nv set nve vxlan source address 10.10.10.1

cumulus@leaf01:mgmt:~$ nv set nve vxlan arp-nd-suppress on

cumulus@leaf01:mgmt:~$ nv config apply -y

cumulus@leaf01:mgmt:~$ nv config save

Configure the loopback IP address and the VXLAN shared address for MLAG:

cumulus@leaf02:mgmt:~$ nv set interface lo ip address 10.10.10.2/32

cumulus@leaf02:mgmt:~$ nv set nve vxlan mlag shared-address 10.0.1.1

Create the NVE interface with all required parameters and map VLAN 100 to a VNI to create the VXLAN tunnel:

cumulus@leaf02:mgmt:~$ nv set nve vxlan enable on

cumulus@leaf02:mgmt:~$ nv set bridge domain br_default vlan 100 vni 100100

cumulus@leaf02:mgmt:~$ nv set nve vxlan source address 10.10.10.2

cumulus@leaf02:mgmt:~$ nv set nve vxlan arp-nd-suppress on

cumulus@leaf02:mgmt:~$ nv config apply -y

cumulus@leaf02:mgmt:~$ nv config save

Configure the loopback IP address and the VXLAN shared address for MLAG:

cumulus@leaf03:mgmt:~$ nv set interface lo ip address 10.10.10.3/32

cumulus@leaf03:mgmt:~$ nv set nve vxlan mlag shared-address 10.0.1.2

Create the NVE interface with all required parameters and map VLAN 100 to a VNI to create the VXLAN tunnel:

cumulus@leaf03:mgmt:~$ nv set nve vxlan enable on

cumulus@leaf03:mgmt:~$ nv set bridge domain br_default vlan 100 vni 100100

cumulus@leaf03:mgmt:~$ nv set nve vxlan source address 10.10.10.3

cumulus@leaf03:mgmt:~$ nv set nve vxlan arp-nd-suppress on

cumulus@leaf03:mgmt:~$ nv config apply -y

cumulus@leaf03:mgmt:~$ nv config save

Configure the loopback IP address and the VXLAN shared address for MLAG:

cumulus@leaf04:mgmt:~$ nv set interface lo ip address 10.10.10.4/32

cumulus@leaf04:mgmt:~$ nv set nve vxlan mlag shared-address 10.0.1.2

Create the NVE interface with all required parameters and map VLAN 100 to a VNI to create the VXLAN tunnel:

cumulus@leaf04:mgmt:~$ nv set nve vxlan enable on

cumulus@leaf04:mgmt:~$ nv set bridge domain br_default vlan 100 vni 100100

cumulus@leaf04:mgmt:~$ nv set nve vxlan source address 10.10.10.4

cumulus@leaf04:mgmt:~$ nv set nve vxlan arp-nd-suppress on

cumulus@leaf04:mgmt:~$ nv config apply -y

cumulus@leaf04:mgmt:~$ nv config save

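BGP-EVPN Peering Configuration

Configure the EVPN control plane to advertise layer 2 information over the layer 3 fabric. Set all BGP neighbors to use the l2vpn-evpn address family.

With multiple VRFs, you must complete this configuration for each VRF.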

cumulus@leaf01:mgmt:~$ nv set evpn enable on

cumulus@leaf01:mgmt:~$ nv set vrf default router bgp address-family l2vpn-evpn enable on

cumulus@leaf01:mgmt:~$ nv set vrf default router bgp neighbor peerlink.4094 address-family l2vpn-evpn enable on

cumulus@leaf01:mgmt:~$ nv set vrf default router bgp neighbor swp51 address-family l2vpn-evpn enable on

cumulus@leaf01:mgmt:~$ nv set vrf default router bgp neighbor swp52 address-family l2vpn-evpn enable on

cumulus@leaf01:mgmt:~$ nv config apply -y

cumulus@leaf01:mgmt:~$ nv config save

cumulus@leaf02:mgmt:~$ nv set evpn enable on

cumulus@leaf02:mgmt:~$ nv set vrf default router bgp address-family l2vpn-evpn enable on

cumulus@leaf02:mgmt:~$ nv set vrf default router bgp neighbor peerlink.4094 address-family l2vpn-evpn enable on

cumulus@leaf02:mgmt:~$ nv set vrf default router bgp neighbor swp51 address-family l2vpn-evpn enable on

cumulus@leaf02:mgmt:~$ nv set vrf default router bgp neighbor swp52 address-family l2vpn-evpn enable on

cumulus@leaf02:mgmt:~$ nv config apply -y

cumulus@leaf02:mgmt:~$ nv config save

cumulus@leaf03:mgmt:~$ nv set evpn enable on

cumulus@leaf03:mgmt:~$ nv set vrf default router bgp address-family l2vpn-evpn enable on

cumulus@leaf03:mgmt:~$ nv set vrf default router bgp neighbor peerlink.4094 address-family l2vpn-evpn enable on

cumulus@leaf03:mgmt:~$ nv set vrf default router bgp neighbor swp51 address-family l2vpn-evpn enable on

cumulus@leaf03:mgmt:~$ nv set vrf default router bgp neighbor swp52 address-family l2vpn-evpn enable on

cumulus@leaf03:mgmt:~$ nv config apply -y

cumulus@leaf03:mgmt:~$ nv config save

cumulus@leaf04:mgmt:~$ nv set evpn enable on

cumulus@leaf04:mgmt:~$ nv set vrf default router bgp address-family l2vpn-evpn enable on

cumulus@leaf04:mgmt:~$ nv set vrf default router bgp neighbor peerlink.4094 address-family l2vpn-evpn enable on

cumulus@leaf04:mgmt:~$ nv set vrf default router bgp neighbor swp51 address-family l2vpn-evpn enable on

cumulus@leaf04:mgmt:~$ nv set vrf default router bgp neighbor swp52 address-family l2vpn-evpn enable on

cumulus@leaf04:mgmt:~$ nv config apply -y

cumulus@leaf04:mgmt:~$ nv config save

cumulus@spine01:mgmt:~$ nv set evpn enable on

cumulus@spine01:mgmt:~$ nv set vrf default router bgp address-family l2vpn-evpn enable on

cumulus@spine01:mgmt:~$ nv set vrf default router bgp neighbor swp1 address-family l2vpn-evpn enable on

cumulus@spine01:mgmt:~$ nv set vrf default router bgp neighbor swp2 address-family l2vpn-evpn enable on

cumulus@spine01:mgmt:~$ nv set vrf default router bgp neighbor swp3 address-family l2vpn-evpn enable on

cumulus@spine01:mgmt:~$ nv set vrf default router bgp neighbor swp4 address-family l2vpn-evpn enable on

cumulus@spine01:mgmt:~$ nv config apply -y

cumulus@spine01:mgmt:~$ nv config save

cumulus@spine02:mgmt:~$ nv set evpn enable on

cumulus@spine02:mgmt:~$ nv set vrf default router bgp address-family l2vpn-evpn enable on

cumulus@spine02:mgmt:~$ nv set vrf default router bgp neighbor swp1 address-family l2vpn-evpn enable on

cumulus@spine02:mgmt:~$ nv set vrf default router bgp neighbor swp2 address-family l2vpn-evpn enable on

cumulus@spine02:mgmt:~$ nv set vrf default router bgp neighbor swp3 address-family l2vpn-evpn enable on

cumulus@spine02:mgmt:~$ nv set vrf default router bgp neighbor swp4 address-family l2vpn-evpn enable on

cumulus@spine02:mgmt:~$ nv config apply -y

cumulus@spine02:mgmt:~$ nv config save

cumulus@leaf01:~$ sudo vtysh

leaf01# configure terminal

leaf01(config)# router bgp 65101

leaf01(config-router)# address-family l2vpn evpn

leaf01(config-router-af)# neighbor peerlink.4094 activate

leaf01(config-router-af)# neighbor swp51 activate

leaf01(config-router-af)# neighbor swp52 activate

leaf01(config-router-af)# advertise-all-vni

leaf01(config-router-af)# end

leaf01# write memory

leaf01# exit

cumulus@leaf02:~$ sudo vtysh

leaf02# configure terminal

leaf02(config)# router bgp 65102

leaf02(config-router)# address-family l2vpn evpn

leaf02(config-router-af)# neighbor peerlink.4094 activate

leaf02(config-router-af)# neighbor swp51 activate

leaf02(config-router-af)# neighbor swp52 activate

leaf02(config-router-af)# advertise-all-vni

leaf02(config-router-af)# end

leaf02# write memory

leaf02# exit

cumulus@leaf03:~$ sudo vtysh

leaf03# configure terminal

leaf03(config)# router bgp 65103

leaf03(config-router)# address-family l2vpn evpn

leaf03(config-router-af)# neighbor peerlink.4094 activate

leaf03(config-router-af)# neighbor swp51 activate

leaf03(config-router-af)# neighbor swp52 activate

leaf03(config-router-af)# advertise-all-vni

leaf03(config-router-af)# end

leaf03# write memory

leaf03# exit

cumulus@leaf04:~$ sudo vtysh

leaf04# configure terminal

leaf04(config)# router bgp 65104

leaf04(config-router)# address-family l2vpn evpn

leaf04(config-router-af)# neighbor peerlink.4094 activate

leaf04(config-router-af)# neighbor swp51 activate

leaf04(config-router-af)# neighbor swp52 activate

leaf04(config-router-af)# advertise-all-vni

leaf04(config-router-af)# end

leaf04# write memory

leaf04# exit

cumulus@spine01:~$ sudo vtysh

spine01# configure terminal

spine01(config)# router bgp 65199

spine01(config-router)# address-family l2vpn evpn

spine01(config-router-af)# neighbor swp1 activate

spine01(config-router-af)# neighbor swp2 activate

spine01(config-router-af)# neighbor swp3 activate

spine01(config-router-af)# neighbor swp4 activate

spine01(config-router-af)# end

spine01# write memory

spine01# exit

cumulus@spine02:~$ sudo vtysh

spine02# configure terminal

spine02(config)# router bgp 65199

spine02(config-router)# address-family l2vpn evpn

spine02(config-router-af)# neighbor swp1 activate

spine02(config-router-af)# neighbor swp2 activate

spine02(config-router-af)# neighbor swp3 activate

spine02(config-router-af)# neighbor swp4 activate

spine02(config-router-af)# end

spine02# write memory

spine02# exit

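Advertising VXLAN VTEP IP Addresses into BGP and VNIs into EVPN

The VXLAN tunnels created with the local loopback address and the nve vxlan mlag shared-address must be reachable through the underlay fabric, using routes advertised by BGP IPv4.

You can do this with the redistribute connected command described in the earlier BGP configuration section.

VXLAN-EVPN Configuration Verification

Use the nv show nve command to verify the NVE configuration. It shows the created NVE interface, its operational state, the tunnel source address, and the MLAG shared address.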

cumulus@leaf01:mgmt:~$ nv show nve

operational applied description

-------------------------- ----------- ---------- ----------------------------------------------------------------------

vxlan

enable on on Turn the feature 'on' or 'off'. The default is 'off'.

arp-nd-suppress on on Controls dynamic MAC learning over VXLAN tunnels based on received...

mac-learning off off Controls dynamic MAC learning over VXLAN tunnels based on received...

mtu 9216 9216 interface mtu

port 4789 4789 UDP port for VXLAN frames

flooding

enable on on Turn the feature 'on' or 'off'. The default is 'off'.

[head-end-replication] evpn evpn BUM traffic is replicated and individual copies sent to remote dest...

mlag

shared-address 10.0.1.1 10.0.1.1 shared anycast address for MLAG peers

source

address 10.10.10.1 10.10.10.1 IP addresses of this node's VTEP or 'auto'. If 'auto', use the pri...

cumulus@leaf02:mgmt:~$ nv show nve

operational applied description

-------------------------- ----------- ---------- ----------------------------------------------------------------------

vxlan

enable on on Turn the feature 'on' or 'off'. The default is 'off'.

arp-nd-suppress on on Controls dynamic MAC learning over VXLAN tunnels based on received...

mac-learning off off Controls dynamic MAC learning over VXLAN tunnels based on received...

mtu 9216 9216 interface mtu

port 4789 4789 UDP port for VXLAN frames

flooding

enable on on Turn the feature 'on' or 'off'. The default is 'off'.

[head-end-replication] evpn evpn BUM traffic is replicated and individual copies sent to remote dest...

mlag

shared-address 10.0.1.1 10.0.1.1 shared anycast address for MLAG peers

source

address 10.10.10.2 10.10.10.2 IP addresses of this node's VTEP or 'auto'. If 'auto', use the pri...

cumulus@leaf03:mgmt:~$ nv show nve

operational applied description

-------------------------- ----------- ---------- ----------------------------------------------------------------------

vxlan

enable on on Turn the feature 'on' or 'off'. The default is 'off'.

arp-nd-suppress on on Controls dynamic MAC learning over VXLAN tunnels based on received...

mac-learning off off Controls dynamic MAC learning over VXLAN tunnels based on received...

mtu 9216 9216 interface mtu

port 4789 4789 UDP port for VXLAN frames

flooding

enable on on Turn the feature 'on' or 'off'. The default is 'off'.

[head-end-replication] evpn evpn BUM traffic is replicated and individual copies sent to remote dest...

mlag

shared-address 10.0.1.2 10.0.1.2 shared anycast address for MLAG peers

source

address 10.10.10.3 10.10.10.3 IP addresses of this node's VTEP or 'auto'. If 'auto', use the pri...

cumulus@leaf04:mgmt:~$ nv show nve

operational applied description

-------------------------- ----------- ---------- ----------------------------------------------------------------------

vxlan

enable on on Turn the feature 'on' or 'off'. The default is 'off'.

arp-nd-suppress on on Controls dynamic MAC learning over VXLAN tunnels based on received...

mac-learning off off Controls dynamic MAC learning over VXLAN tunnels based on received...

mtu 9216 9216 interface mtu

port 4789 4789 UDP port for VXLAN frames

flooding

enable on on Turn the feature 'on' or 'off'. The default is 'off'.

[head-end-replication] evpn evpn BUM traffic is replicated and individual copies sent to remote dest...

mlag

shared-address 10.0.1.2 10.0.1.2 shared anycast address for MLAG peers

source

address 10.10.10.4 10.10.10.4 IP addresses of this node's VTEP or 'auto'. If 'auto', use the pri...
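
Comparing the four outputs above by eye is error-prone, so the two address rows can be checked with a short script. This is a minimal Python sketch that assumes the nv show nve text has already been captured as a string. The function names are hypothetical, and the rules it applies (operational equals applied, the shared address matches within an MLAG pair, the source address is unique per switch) are inferred from the output shown in this guide.

```python
def parse_nve_addresses(output):
    """Return {row: (operational, applied)} for the two VTEP address rows."""
    rows = {}
    for line in output.splitlines():
        fields = line.split()
        if len(fields) >= 3 and fields[0] in ("shared-address", "address"):
            rows[fields[0]] = (fields[1], fields[2])
    return rows


def check_mlag_pair(nve_a, nve_b):
    """Return a list of human-readable problems; empty means consistent."""
    problems = []
    for name, nve in (("first", nve_a), ("second", nve_b)):
        for row, (operational, applied) in nve.items():
            if operational != applied:
                problems.append(
                    f"{name} switch: {row} operational {operational} "
                    f"!= applied {applied}"
                )
    if nve_a.get("shared-address") != nve_b.get("shared-address"):
        problems.append("shared-address differs between MLAG peers")
    if nve_a.get("address") == nve_b.get("address"):
        problems.append("source address is not unique per switch")
    return problems


# Address rows copied from the leaf01 and leaf02 output in this guide.
LEAF01 = """mlag
shared-address 10.0.1.1 10.0.1.1 shared anycast address for MLAG peers
source
address 10.10.10.1 10.10.10.1 IP addresses of this node's VTEP or 'auto'.
"""
LEAF02 = """mlag
shared-address 10.0.1.1 10.0.1.1 shared anycast address for MLAG peers
source
address 10.10.10.2 10.10.10.2 IP addresses of this node's VTEP or 'auto'.
"""

print(check_mlag_pair(parse_nve_addresses(LEAF01), parse_nve_addresses(LEAF02)))
```

The parser relies on whitespace-separated columns, so it works on the flattened output reproduced here as well as on aligned terminal output.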

MLAG and VXLAN Interface Configuration Verification

When the NVE interface is configured with an MLAG shared address, it is active-active on both MLAG peers. Run net show clag to view it alongside the other MLAG links.

cumulus@leaf01:mgmt:~$ net show clag

The peer is alive

Our Priority, ID, and Role: 1000 44:38:39:00:00:59 primary

Peer Priority, ID, and Role: 2000 44:38:39:00:00:5a secondary

Peer Interface and IP: peerlink.4094 fe80::4638:39ff:fe00:5a (linklocal)

VxLAN Anycast IP: 10.0.1.1

Backup IP: 192.168.200.12 vrf mgmt (active)

System MAC: 44:38:39:ff:00:01

CLAG Interfaces

Our Interface Peer Interface CLAG Id Conflicts Proto-Down Reason

---------------- ---------------- ------- -------------------- -----------------

esxi01 esxi01 1 - -

vxlan48 vxlan48 - - -

cumulus@leaf02:mgmt:~$ net show clag

The peer is alive

Our Priority, ID, and Role: 2000 44:38:39:00:00:5a secondary

Peer Priority, ID, and Role: 1000 44:38:39:00:00:59 primary

Peer Interface and IP: peerlink.4094 fe80::4638:39ff:fe00:59 (linklocal)

VxLAN Anycast IP: 10.0.1.1

Backup IP: 192.168.200.11 vrf mgmt (active)

System MAC: 44:38:39:ff:00:01

CLAG Interfaces

Our Interface Peer Interface CLAG Id Conflicts Proto-Down Reason

---------------- ---------------- ------- -------------------- -----------------

esxi01 esxi01 1 - -

vxlan48 vxlan48 - - -

cumulus@leaf03:mgmt:~$ net show clag

The peer is alive

Our Priority, ID, and Role: 1000 44:38:39:00:00:5d primary

Peer Priority, ID, and Role: 2000 44:38:39:00:00:5e secondary

Peer Interface and IP: peerlink.4094 fe80::4638:39ff:fe00:5e (linklocal)

VxLAN Anycast IP: 10.0.1.2

Backup IP: 192.168.200.14 vrf mgmt (active)

System MAC: 44:38:39:ff:00:02

CLAG Interfaces

Our Interface Peer Interface CLAG Id Conflicts Proto-Down Reason

---------------- ---------------- ------- -------------------- -----------------

esxi03 esxi03 1 - -

vxlan48 vxlan48 - - -

cumulus@leaf04:mgmt:~$ net show clag

The peer is alive

Our Priority, ID, and Role: 2000 44:38:39:00:00:5e secondary

Peer Priority, ID, and Role: 1000 44:38:39:00:00:5d primary

Peer Interface and IP: peerlink.4094 fe80::4638:39ff:fe00:5d (linklocal)

VxLAN Anycast IP: 10.0.1.2

Backup IP: 192.168.200.13 vrf mgmt (active)

System MAC: 44:38:39:ff:00:02

CLAG Interfaces

Our Interface Peer Interface CLAG Id Conflicts Proto-Down Reason

---------------- ---------------- ------- -------------------- -----------------

esxi03 esxi03 1 - -

vxlan48 vxlan48 - - -
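
The fields that matter for an active-active VTEP in the net show clag output above are the peer status, the role, the VxLAN Anycast IP, and the System MAC. The following minimal Python sketch extracts them from captured text and compares two peers. The function names and the consistency rules are assumptions based on the output shown in this guide, not an NVIDIA-provided check.

```python
def parse_clag(output):
    """Extract peer status, role, anycast IP and system MAC from the text."""
    info = {"peer_alive": False}
    for raw in output.splitlines():
        line = raw.strip()
        if line == "The peer is alive":
            info["peer_alive"] = True
        elif line.startswith("Our Priority, ID, and Role:"):
            info["role"] = line.split()[-1]
        elif line.startswith("VxLAN Anycast IP:"):
            info["anycast_ip"] = line.split(":", 1)[1].strip()
        elif line.startswith("System MAC:"):
            info["system_mac"] = line.split(":", 1)[1].strip()
    return info


def pair_consistent(a, b):
    """True if two parsed outputs look like a healthy MLAG pair."""
    return (
        a["peer_alive"]
        and b["peer_alive"]
        and a.get("anycast_ip") is not None
        and a.get("anycast_ip") == b.get("anycast_ip")
        and a.get("system_mac") == b.get("system_mac")
        and {a.get("role"), b.get("role")} == {"primary", "secondary"}
    )


# Lines copied from the leaf01 and leaf02 output in this guide.
LEAF01 = """The peer is alive
Our Priority, ID, and Role: 1000 44:38:39:00:00:59 primary
VxLAN Anycast IP: 10.0.1.1
System MAC: 44:38:39:ff:00:01
"""
LEAF02 = """The peer is alive
Our Priority, ID, and Role: 2000 44:38:39:00:00:5a secondary
VxLAN Anycast IP: 10.0.1.1
System MAC: 44:38:39:ff:00:01
"""

print(pair_consistent(parse_clag(LEAF01), parse_clag(LEAF02)))
```

A False result points to a mismatch worth investigating, for example two peers that report different anycast addresses.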

BGP-EVPN Peering and VTEP Address Advertisement Verification

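BGP-EVPN peering verification is similar to IPv4 verification. Each address family lists its configured neighbors and their peering state. To view the BGP peering tables, run net show bgp summary at the switch prompt. In vtysh, show ip bgp summary displays the IPv4 unicast table and show bgp l2vpn evpn summary displays the EVPN table.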

cumulus@leaf01:mgmt:~$ net show bgp summary

show bgp ipv4 unicast summary

=============================

BGP router identifier 10.10.10.1, local AS number 4259632651 vrf-id 0

BGP table version 96

RIB entries 11, using 2112 bytes of memory

Peers 3, using 64 KiB of memory

Neighbor V AS MsgRcvd MsgSent TblVer InQ OutQ Up/Down State/PfxRcd PfxSnt

leaf02(peerlink.4094) 4 4259632649 28055 28052 0 0 0 00:51:58 5 6

spine01(swp51) 4 4200000000 28066 28058 0 0 0 00:49:27 4 6

spine02(swp52) 4 4200000000 28070 28061 0 0 0 00:48:50 4 6

Total number of neighbors 3

show bgp l2vpn evpn summary

===========================

BGP router identifier 10.10.10.1, local AS number 4259632651 vrf-id 0

BGP table version 0

RIB entries 7, using 1344 bytes of memory

Peers 3, using 64 KiB of memory

Neighbor V AS MsgRcvd MsgSent TblVer InQ OutQ Up/Down State/PfxRcd PfxSnt

leaf02(peerlink.4094) 4 4259632649 28055 28052 0 0 0 00:51:58 2 3

spine01(swp51) 4 4200000000 28067 28059 0 0 0 00:49:27 2 3

spine02(swp52) 4 4200000000 28070 28061 0 0 0 00:48:50 2 3

Total number of neighbors 3
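
In the leaf01 summary above, a number in the State/PfxRcd column means the session is established, while a state name such as Idle or Active means it is not. The following minimal Python sketch applies that rule to captured net show bgp summary text. The function names are hypothetical, and the parser assumes the 11-column neighbor rows shown in this guide.

```python
def parse_bgp_summary(output):
    """Return {address_family: {neighbor: state_or_prefix_count}}."""
    sections = {}
    current = None
    for line in output.splitlines():
        fields = line.split()
        if not fields:
            continue
        if fields[0] == "show" and fields[-1] == "summary":
            # "show bgp ipv4 unicast summary" -> "ipv4 unicast"
            current = " ".join(fields[2:-1])
            sections[current] = {}
        elif current is not None and len(fields) == 11 and fields[1] == "4":
            # fields[9] is the State/PfxRcd column of a neighbor row.
            sections[current][fields[0]] = fields[9]
    return sections


def not_established(sections):
    """List (address_family, neighbor, state) for sessions that are down."""
    return [
        (af, neighbor, state)
        for af, neighbors in sections.items()
        for neighbor, state in neighbors.items()
        if not state.isdigit()
    ]


# Neighbor rows copied from the leaf01 output in this guide.
LEAF01 = """show bgp ipv4 unicast summary
Neighbor V AS MsgRcvd MsgSent TblVer InQ OutQ Up/Down State/PfxRcd PfxSnt
leaf02(peerlink.4094) 4 4259632649 28055 28052 0 0 0 00:51:58 5 6
spine01(swp51) 4 4200000000 28066 28058 0 0 0 00:49:27 4 6
spine02(swp52) 4 4200000000 28070 28061 0 0 0 00:48:50 4 6
show bgp l2vpn evpn summary
Neighbor V AS MsgRcvd MsgSent TblVer InQ OutQ Up/Down State/PfxRcd PfxSnt
leaf02(peerlink.4094) 4 4259632649 28055 28052 0 0 0 00:51:58 2 3
spine01(swp51) 4 4200000000 28067 28059 0 0 0 00:49:27 2 3
spine02(swp52) 4 4200000000 28070 28061 0 0 0 00:48:50 2 3
"""

print(not_established(parse_bgp_summary(LEAF01)))
```

An empty list indicates that every neighbor in both address families reports a prefix count. The same check can be run against the output of the other leaf switches.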

cumulus@leaf02:mgmt:~$ net show bgp summary

show bgp ipv4 unicast summary

=============================

BGP router identifier 10.10.10.2, local AS number 4259632649 vrf-id 0

BGP table version 89

RIB entries 11, using 2112 bytes of memory

Peers 3, using 64 KiB of memory

Neighbor V AS MsgRcvd MsgSent TblVer InQ OutQ Up/Down State/PfxRcd PfxSnt

leaf01(peerlink.4094) 4 4259632651 28032 28045 0 0 0 00:51:23 5 6

spine01(swp51) 4 4200000000 28044 28044 0 0 0 00:48:53 5 6

spine02(swp52) 4 4200000000 28064 28050 0 0 0 00:48:16 5 6

Total number of neighbors 3

show bgp l2vpn evpn summary

===========================

BGP router identifier 10.10.10.2, local AS number 4259632649 vrf-id 0

BGP table version 0

RIB entries 7, using 1344 bytes of memory

Peers 3, using 64 KiB of memory

Neighbor V AS MsgRcvd MsgSent TblVer InQ OutQ Up/Down State/PfxRcd PfxSnt

leaf01(peerlink.4094) 4 4259632651 28032 28045 0 0 0 00:51:24 2 3

spine01(swp51) 4 4200000000 28044 28044 0 0 0 00:48:54 2 3

spine02(swp52) 4 4200000000 28064 28050 0 0 0 00:48:17 2 3

Total number of neighbors 3

cumulus@leaf03:mgmt:~$ net show bgp summary

show bgp ipv4 unicast summary

=============================

BGP router identifier 10.10.10.3, local AS number 4259632661 vrf-id 0

BGP table version 85

RIB entries 11, using 2112 bytes of memory

Peers 3, using 64 KiB of memory

Neighbor V AS MsgRcvd MsgSent TblVer InQ OutQ Up/Down State/PfxRcd PfxSnt

leaf04(peerlink.4094) 4 4259632667 53298 53295 0 0 0 00:48:56 5 6

spine01(swp51) 4 4200000000 53309 53327 0 0 0 00:47:12 4 6

spine02(swp52) 4 4200000000 53317 53320 0 0 0 00:46:34 4 6

Total number of neighbors 3

show bgp l2vpn evpn summary

===========================

BGP router identifier 10.10.10.3, local AS number 4259632661 vrf-id 0

BGP table version 0

RIB entries 7, using 1344 bytes of memory

Peers 3, using 64 KiB of memory

Neighbor V AS MsgRcvd MsgSent TblVer InQ OutQ Up/Down State/PfxRcd PfxSnt

leaf04(peerlink.4094) 4 4259632667 53298 53295 0 0 0 00:48:57 2 3

spine01(swp51) 4 4200000000 53309 53327 0 0 0 00:47:13 2 3

spine02(swp52) 4 4200000000 53317 53320 0 0 0 00:46:35 2 3

Total number of neighbors 3

cumulus@leaf04:mgmt:~$ net show bgp summary

show bgp ipv4 unicast summary

=============================

BGP router identifier 10.10.10.4, local AS number 4259632667 vrf-id 0

BGP table version 74

RIB entries 11, using 2112 bytes of memory

Peers 3, using 64 KiB of memory

Neighbor V AS MsgRcvd MsgSent TblVer InQ OutQ Up/Down State/PfxRcd PfxSnt

leaf03(peerlink.4094) 4 4259632661 27978 27990 0 0 0 00:48:07 5 6

spine01(swp51) 4 4200000000 27997 27994 0 0 0 00:46:23 5 6

spine02(swp52) 4 4200000000 28009 27995 0 0 0 00:45:45 5 6

Total number of neighbors 3

show bgp l2vpn evpn summary

===========================

BGP router identifier 10.10.10.4, local AS number 4259632667 vrf-id 0

BGP table version 0

RIB entries 7, using 1344 bytes of memory

Peers 3, using 64 KiB of memory

Neighbor V AS MsgRcvd MsgSent TblVer InQ OutQ Up/Down State/PfxRcd PfxSnt

leaf03(peerlink.4094) 4 4259632661 27978 27990 0 0 0 00:48:07 2 3